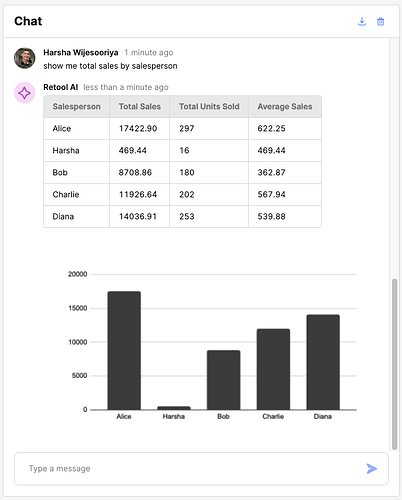

After hours of experimenting, this was my Eureka moment.

Wow - how did you do that?!

I am using JavaScript and OpenAI's Assistants API to push this kind of content to the Retool AI chat.

Didn't realize you could push a chart into the chat. Is that native, or are you doing something to visualize the results that are returned from the API?

well that's neat!! so is this the response from OpenAI or are you post-processing it somehow before it displays?

You either send a request to OpenAI's Code Interpreter and get a chart image back as the response, or, as I just found out, you can use QuickChart (quickchart.io) to do it much faster. This demo uses QuickChart: https://youtu.be/6QWdfnqHmPo
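For anyone wondering what the QuickChart route looks like, here is a minimal sketch, not the exact code from the demo. QuickChart renders a Chart.js-style config passed in the `c` query parameter of `https://quickchart.io/chart`. The helper name `buildQuickChartUrl` and the sample sales data are made up for illustration.

```javascript
// Build a QuickChart image URL from a Chart.js-style config object.
// buildQuickChartUrl is a hypothetical helper name, not part of any library.
function buildQuickChartUrl(chartConfig, { width = 500, height = 300 } = {}) {
  const params = new URLSearchParams({
    c: JSON.stringify(chartConfig), // URLSearchParams handles the URL encoding
    width: String(width),
    height: String(height),
  });
  return `https://quickchart.io/chart?${params.toString()}`;
}

// Sample data invented for this example.
const exampleConfig = {
  type: "bar",
  data: {
    labels: ["Q1", "Q2", "Q3"],
    datasets: [{ label: "Sales", data: [12, 19, 7] }],
  },
};

const chartUrl = buildQuickChartUrl(exampleConfig);
```

Since the result is just an image URL, there is no second round trip to wait on, which is probably why it feels faster than asking Code Interpreter to draw the chart.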

I don't do any post-processing. I got the Retool AI Chat component to listen for the API response before it shows the results in the chat window.

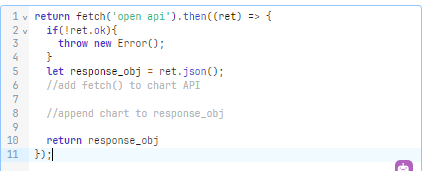

oh, so you use Transform results to add the chart? not sure how you'd use the Code Interpreter there though (a fetch() call maybe?)... i don't think the Success/Failure events would work.

To be clear, I am using the OpenAI Assistants API. Right now I have added a fixed delay before fetching the response, without actually checking the run status. I will add that check to the JS Query down the line.
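A rough sketch of what that run-status check could look like in place of the fixed delay. It assumes the Assistants API run endpoint (`GET /v1/threads/{thread_id}/runs/{run_id}`) and the `OpenAI-Beta: assistants=v2` header as documented at the time of writing. The function name `waitForRun` and the `fetchFn`, `intervalMs`, and `maxAttempts` parameters are made up for this example, not taken from the actual query.

```javascript
// Statuses at which polling should stop. "requires_action" is included
// because the run will not progress until the caller submits tool outputs.
const STOP_STATUSES = new Set([
  "completed", "failed", "cancelled", "expired", "incomplete", "requires_action",
]);

// Poll a run until it reaches one of the stop statuses or we give up.
// fetchFn is injectable (an assumption of this sketch) so it can be mocked.
async function waitForRun({
  apiKey,
  threadId,
  runId,
  fetchFn = fetch,
  intervalMs = 1000,
  maxAttempts = 30,
}) {
  const url = `https://api.openai.com/v1/threads/${threadId}/runs/${runId}`;
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const res = await fetchFn(url, {
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "OpenAI-Beta": "assistants=v2",
      },
    });
    if (!res.ok) throw new Error(`Run lookup failed with HTTP ${res.status}`);
    const run = await res.json();
    if (STOP_STATUSES.has(run.status)) return run;
    // Still queued or in progress: wait before asking again.
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
  throw new Error(`Run ${runId} did not finish after ${maxAttempts} attempts`);
}
```

The caller would then read the thread's messages only when the returned `run.status` is `"completed"`, and surface an error in the chat otherwise.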

I use cURL requests inside my JS Queries.

https://platform.openai.com/docs/assistants/overview

ya I use the same API in a custom component so we could do a few things not supported by the Chat Component. That's where I'm getting a bit confused about how you did this, because the Chat Component handles sending off the message and displaying the response, so there isn't a need to use the API directly.

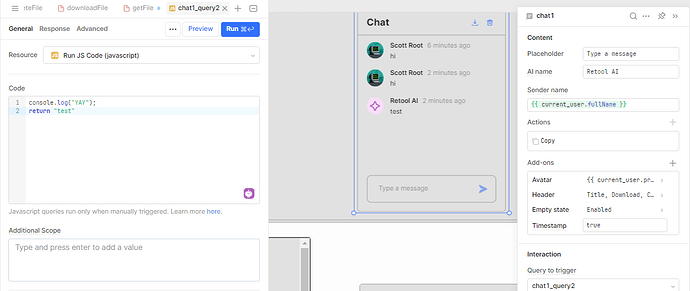

ok I may have just had the same "AH HA" moment as you while typing this out and had to try it. I noticed the 'Query to trigger' property only lets you select a query using the Retool AI resource... I just found that you can force it to use a different type of query though. If you set a query to Retool AI and save it, then select the query for 'Query to trigger' and finally go back to the query and change the type to 'Run JS Code' it now uses an 'unsupported' query type successfully and anything the query returns is used as the AI Response:

I'm guessing this is what you discovered?? this would let you make those fetch/curl requests to the OpenAI API then to the chart API before the response is displayed

rather nifty if this is what you discovered to do this!
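To make the "anything the query returns is used as the AI Response" part concrete, here is a small sketch of what the last step of such a 'Run JS Code' query might return. It assumes a plain string return is accepted and that the chat renders Markdown images, neither of which is confirmed in this thread. `buildChatReply` is a hypothetical helper name and the URL is a placeholder.

```javascript
// Combine the assistant's text and a chart image URL into one Markdown reply.
// buildChatReply is a hypothetical helper, not a Retool or OpenAI function.
function buildChatReply(assistantText, chartUrl, altText = "chart") {
  const text = (assistantText || "").trim();
  if (!chartUrl) return text; // no chart: return the text on its own
  const image = `![${altText}](${chartUrl})`;
  return text ? `${text}\n\n${image}` : image;
}

// Inside the JS query, the final line would be something like:
//   return buildChatReply(replyFromAssistant, chartUrl);
// Placeholder values are used here so the snippet runs on its own.
const reply = buildChatReply(
  "Sales by quarter:",
  "https://quickchart.io/chart?c=%7B%7D"
);
```

Keeping the formatting in one small function makes it easy to swap the image source (Code Interpreter output vs. a chart URL) without touching the rest of the query.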

Your approach is also correct. I have found a better hack than that. I used the Watched Inputs section of the Retool AI to handle the Input and the System Message parameters. This gives me more flexibility to do various things outside the Retool AI and just ingest the final results to the two parameters I mentioned.

This is really cool! Can you explain a bit more about how you set this up? I have a model set up with Function Calling, deployed to an endpoint. Basically, I want the user's input to go to my endpoint instead and have the results shown in the chat, while still keeping the Retool Chat component, as it's pretty neat for conversations.

Any ideas?

Do you have a similar post that describes the basics of getting Retool AI to respond to prompts about your internal data?

Super cool stuff!

I also wanted to call out the "AI Chat" template, which gives you a chat UI built off of a list view. The Chat component is great for getting started quickly, but it can never be everything to everyone, and I've found that if I want to render charts & other artifacts within the chat, it's nice to have the flexibility of something composed from other components. Quick demo of the template.

@awilly, to your question, using something like the above allows you to build "agentic workflows" to better interact with your data. Here's an overview of a similar tool I made: [Reagent] Orchestrator | Retool - 5 December 2024 | Loom.

I recorded this a few weeks ago, and I don't go into depth on the data piece. Would people be interested in a blog post or longer write-up on the best ways to build these tools in Retool? I'm happy to put something together.

Super cool! A blog post on this would be helpful. Thank you.

Working on a blog post, but here's a webinar we did last week that goes more into detail on these topics: https://www.youtube.com/watch?v=e7Bs5qiDqpk&ab_channel=Retool