Hi Retool community,

I'm encountering an issue with routing Retool AI's requests through Helicone for LLM monitoring. The exact same approach that I used for proxying OpenAI requests is not working for Anthropic requests.

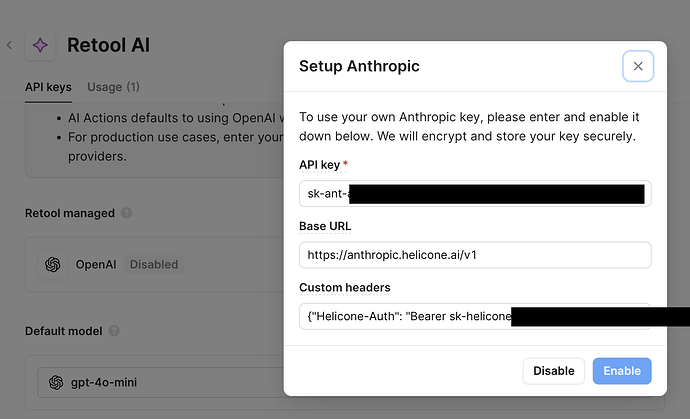

Despite updating the base URL in my Retool AI Resource configuration (see screenshot), requests are still being routed through the default endpoint.

Here's what I've tried so far:

- Updated the base URL field to point to Helicone's endpoint

- Set up a custom provider as an alternative. Requests do get proxied this way, but every one returns a 404

- Verified my API keys and model configurations are correct
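
To rule out the proxy itself, here is a minimal sketch of the request I would expect Retool to send, built outside Retool. It assumes Helicone's Anthropic gateway host is `https://anthropic.helicone.ai`, that it authenticates with a `Helicone-Auth` header, and that requests go to Anthropic's `/v1/messages` path. `build_request` and `send` are hypothetical helper names, and the keys and model name are placeholders you supply yourself. If a provider appends a different path (for example an OpenAI-style one), that could explain the 404s, though I have not confirmed that this is what Retool does.

```python
import json
import urllib.error
import urllib.request

# Assumptions: Helicone's Anthropic gateway host and Anthropic's Messages API path.
HELICONE_ANTHROPIC_BASE = "https://anthropic.helicone.ai"
MESSAGES_PATH = "/v1/messages"


def build_request(base_url, anthropic_key, helicone_key, model):
    """Build (without sending) a Messages API request routed through the proxy."""
    url = base_url.rstrip("/") + MESSAGES_PATH
    headers = {
        "x-api-key": anthropic_key,                 # Anthropic API key
        "anthropic-version": "2023-06-01",          # required Anthropic version header
        "content-type": "application/json",
        "Helicone-Auth": f"Bearer {helicone_key}",  # assumed Helicone auth header
    }
    body = json.dumps({
        "model": model,
        "max_tokens": 16,
        "messages": [{"role": "user", "content": "ping"}],
    }).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def send(request):
    """Send the request and return (status_code, response_text), including on HTTP errors."""
    try:
        with urllib.request.urlopen(request) as resp:
            return resp.status, resp.read().decode("utf-8")
    except urllib.error.HTTPError as err:
        return err.code, err.read().decode("utf-8")


# Usage (placeholders, not real values):
# req = build_request(HELICONE_ANTHROPIC_BASE, "<anthropic key>", "<helicone key>", "<model name>")
# print(send(req))
```

If this succeeds from a terminal while the same base URL fails inside Retool, that would point at how the resource builds its request rather than at the keys or the model.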

Any guidance on either the base URL override or custom provider setup would be greatly appreciated.

Is this potentially a bug?

Thanks in advance,

Hamilton