Thanks for taking the time to think this through and post a video!

I thought I'd explain what I was trying to achieve and how I ended up solving it.

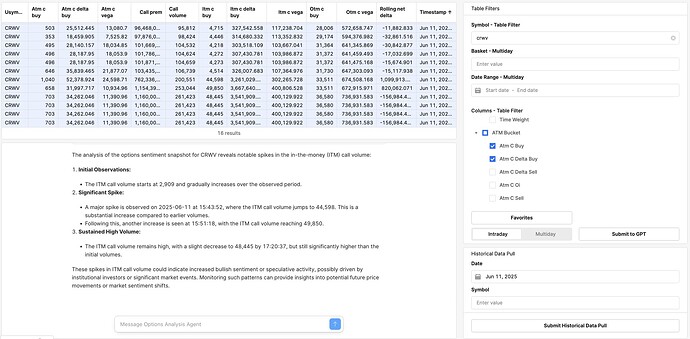

In a nutshell, I have a data-heavy app whose data I'd like to analyze with AI. I take snapshots of live API data throughout the day and add them to a database (each snapshot is stored as new rows rather than overwriting the old ones), and I want my agent to look at a filtered version of that data and point out interesting or unusual changes. I don't have a software development or coding background and have relied heavily on ChatGPT for that part, but I am good at engineering simple solutions to complex problems.

The idea was to pass the filtered data along with a user message to the agent and receive a response based on the agent's analysis of the data.

First, I tried using the raw database table and filtering it by connecting app components to the agent's tool/function logic editor, but that didn't work.

Then I thought: I'll add a table to the app for the agent to analyze. That way I can filter in the app, view the filtered results, and then pass them along with my question to the agent. The issue here was passing this table AND the user prompt to the agent. I tried both workflows and the function editor, and neither would let me pass an app component to the agent WITH the user message.

The last idea was to capture the filtered app table in a "temporary" database table and send that to the agent first – sort of priming the agent – then ask it a question.

Here's what I did:

The agent query is simply `{{ options_analysis_agentChat1.agentInputs }}`.

The user filters data in the app and clicks a submit button. The submit button runs a script that pulls the data and populates the app table.

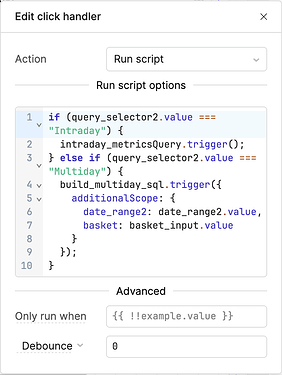

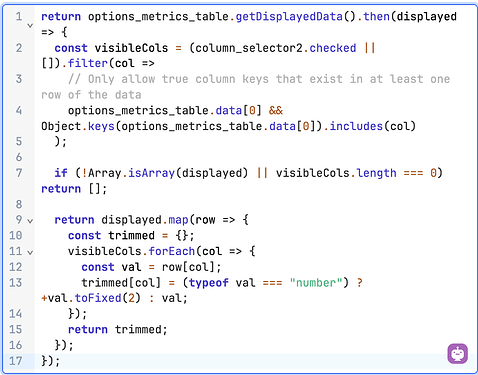

As you can see, depending on the state of a toggle switch component, one of two queries is run. Either one then triggers a JS query that captures the data:
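For readers who want the idea without the screenshots, here's a minimal Python sketch of the toggle-then-capture step. It is not the actual JS query from the app: `query_live`, `query_history`, their column names, and the `captured_at` timestamp are all hypothetical stand-ins.

```python
from datetime import datetime, timezone
from typing import Callable

Row = dict[str, object]

# Hypothetical stand-ins for the two queries the toggle switch chooses between.
def query_live() -> list[Row]:
    return [{"symbol": "XYZ", "price": 10.5, "note": None}]

def query_history() -> list[Row]:
    return [{"symbol": "XYZ", "price": 10.0, "note": None}]

def capture_snapshot(use_live: bool) -> list[Row]:
    """Run one of the two queries based on the toggle, then stamp every row."""
    run_query: Callable[[], list[Row]] = query_live if use_live else query_history
    # Assumption: a shared capture time per snapshot; not confirmed by the app above.
    captured_at = datetime.now(timezone.utc).isoformat()
    # Build new dicts so the query results themselves are left unchanged.
    return [{**row, "captured_at": captured_at} for row in run_query()]
```

Picking the query function first and calling it once keeps the capture logic identical no matter which branch the toggle selects.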

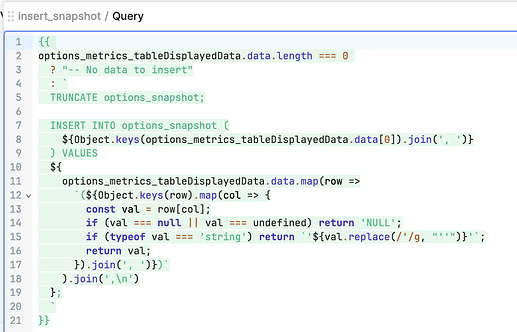

That query then triggers a SQL query that inserts the snapshot into the database:
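A similarly hedged sketch of the insert step, using Python's built-in `sqlite3` so it runs on its own. The table name `agent_snapshot` is hypothetical, and clearing the previous snapshot before inserting is an assumption about how a "temporary" table would be used. A Postgres-backed database would use a different placeholder style.

```python
import sqlite3

def save_snapshot(conn: sqlite3.Connection, rows: list[dict]) -> int:
    """Replace the contents of a hypothetical 'agent_snapshot' table with the captured rows."""
    if not rows:
        return 0  # nothing captured; leave the previous snapshot in place
    columns = list(rows[0].keys())
    # Column names can't be bound as parameters, so they are quoted and interpolated;
    # the values themselves go through '?' placeholders.
    col_sql = ", ".join(f'"{c}"' for c in columns)
    placeholders = ", ".join("?" for _ in columns)
    with conn:  # commits on success, rolls back on error
        conn.execute(f"CREATE TABLE IF NOT EXISTS agent_snapshot ({col_sql})")
        conn.execute("DELETE FROM agent_snapshot")  # drop the previous snapshot
        conn.executemany(
            f"INSERT INTO agent_snapshot ({col_sql}) VALUES ({placeholders})",
            [tuple(row.get(c) for c in columns) for row in rows],
        )
    return len(rows)
```

Because column names are interpolated into the SQL, they should come from your own fixed schema, never from user input.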

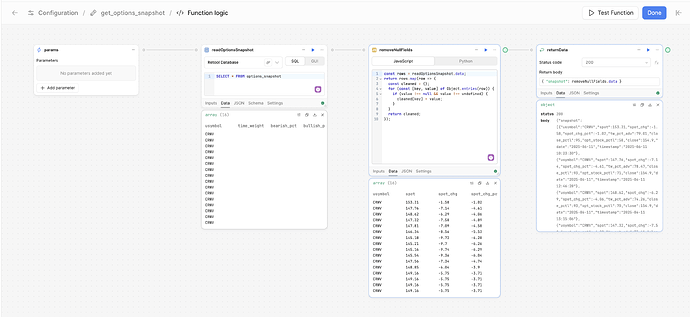

Then, in the agent tool editor, I created a new tool made up of a few blocks: it reads the table, cleans it (removing nulls), and returns the data. In the second block you can see the missing/null columns; the third block cleans the data by removing those nulls, and the fourth formats it for GPT.
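The clean-and-format steps can be sketched like this, assuming the tool receives the table as a list of row dictionaries. `drop_empty_columns` and `format_for_llm` are hypothetical names, and CSV output is my own choice rather than necessarily what the tool does: it writes the column names once instead of repeating them in every row as JSON would, which tends to save tokens.

```python
import csv
import io

def drop_empty_columns(rows: list[dict]) -> list[dict]:
    """Remove every column whose value is None (or missing) in all rows."""
    # Collect column names in first-seen order across all rows.
    columns = list(dict.fromkeys(key for row in rows for key in row))
    keep = [c for c in columns if any(row.get(c) is not None for row in rows)]
    return [{c: row.get(c) for c in keep} for row in rows]

def format_for_llm(rows: list[dict]) -> str:
    """Serialize rows as compact CSV: one header line, then one line per row.

    Expects every row to have the same keys (e.g. the output of drop_empty_columns).
    Remaining None values are written as empty fields.
    """
    if not rows:
        return ""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()
```

For example, the rows `{"a": 1, "b": None, "c": "x"}` and `{"a": 2, "b": None, "c": None}` become the three lines `a,c`, `1,x` and `2,`: column `b` is dropped because it is empty in every row.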

Functionally, a user applies filters in the app and hits Submit, which triggers the priming of the agent. The user then types a question into the agent component and receives a response based on the displayed data. I can also pull data from any database table, or create new tables in the app, and analyze them with the agent in seconds.

Of course, the schema needs to be identical across all tables, but that's built into my API pull: I have a limited number of pulls per day, so I pull everything I'd need in one call, which means every table has an identical schema. The filtering creates hundreds of null columns, but I clean those up before the agent sees the data, which dramatically reduces token usage. My first semi-successful attempt exceeded the token limit by over 100k!!
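Because the whole approach relies on every table sharing one schema, a cheap guard is to compare column lists before handing a table to the agent. This is a hypothetical helper, not part of the setup described here; it uses SQLite's `PRAGMA table_info` so it's self-contained, and it ignores column order.

```python
import sqlite3

def table_columns(conn: sqlite3.Connection, table: str) -> list[str]:
    """Return a table's column names in order (PRAGMA table_info puts the name in field 1)."""
    return [row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')]

def schema_mismatches(schemas: dict[str, list[str]]) -> dict[str, set[str]]:
    """Compare each table's columns with the first table's.

    Returns {table: columns missing or extra} for every table that differs.
    Column order is ignored because the comparison uses sets.
    """
    if not schemas:
        return {}
    reference = set(next(iter(schemas.values())))
    problems: dict[str, set[str]] = {}
    for name, columns in schemas.items():
        diff = reference ^ set(columns)  # symmetric difference: missing or extra
        if diff:
            problems[name] = diff
    return problems
```

An empty result means every table matches the first one and can be passed through the same cleaning and formatting steps.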

It wasn't easy to figure out, but the final solution is very efficiently orchestrated.

Here's a shot of the GPT analysis page of the app.

The next step (the fun part) will be crafting an instruction prompt that dials in the analysis! Right now the response is very surface-level, but I will make it more nuanced with time.