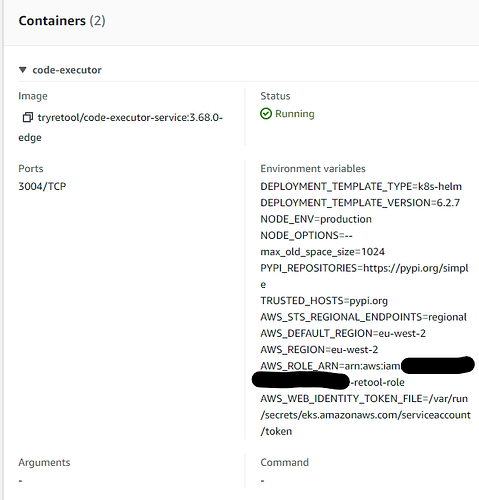

We're keen to use custom Python libraries in our workflows, but nsjail (which that feature depends on) is failing to launch in our containers. These are the code executor's startup logs:

Running code executor container in privileged mode.

iptables: Disallowing link-local addresses

{"level":"info","message":"Not configuring StatsD...","timestamp":"2024-07-13T10:11:42.432Z"}

{"level":"info","message":"Installing request logging middleware...","timestamp":"2024-07-13T10:11:42.438Z"}

{"level":"info","message":"Checking if nsjail can be used","timestamp":"2024-07-13T10:11:42.439Z"}

{"level":"info","message":"Populating environment cache","timestamp":"2024-07-13T10:11:42.442Z"}

{"dd":{"env":"production","service":"code_executor_service","span_id":"7468107029411779173","trace_id":"7468107029411779173"},"level":"info","message":"Done populating","timestamp":"2024-07-13T10:11:42.449Z"}

{"level":"info","message":"Starting code executor on port 3004","timestamp":"2024-07-13T10:11:42.451Z"}

{"dd":{"env":"production","service":"code_executor_service","span_id":"3091449606178479480","trace_id":"678313442480844015"},"level":"error","message":"[E][2024-07-13T10:11:42+0000][24] standaloneMode():275 Couldn't launch the child process","timestamp":"2024-07-13T10:11:42.482Z"}

{"dd":{"env":"production","service":"code_executor_service","span_id":"678313442480844015","trace_id":"678313442480844015"},"level":"info","message":"cleaning up job - /tmp/jobs/83f43ad1-c96b-43f1-9936-dc3c266fb546","timestamp":"2024-07-13T10:11:42.485Z"}

{"dd":{"env":"production","service":"code_executor_service","span_id":"678313442480844015","trace_id":"678313442480844015"},"level":"error","message":"[E][2024-07-13T10:11:42+0000][24] standaloneMode():275 Couldn't launch the child process","timestamp":"2024-07-13T10:11:42.489Z"}

{"level":"info","message":"can use nsjail: false","timestamp":"2024-07-13T10:11:42.490Z"}

We are using the Helm chart (v6.2.5) to deploy Retool into our EKS environment (EC2 nodes, not Fargate). Apart from this, we have been using the platform without issue.

Our nodes use the BOTTLEROCKET_x86_64 AMI type.
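One thing that might be worth ruling out is whether user namespaces are available inside the container. This is only a guess on my part: it assumes nsjail needs to create a user namespace and that the node's `user.max_user_namespaces` sysctl could be set to 0, neither of which the logs above confirm. A minimal sketch of a check that could be run in the code executor container (the helper names are made up for illustration):

```python
from pathlib import Path
from typing import Optional

# Assumption: a limit of 0 here would stop nsjail from creating a user namespace.
SYSCTL_PATH = Path("/proc/sys/user/max_user_namespaces")


def parse_limit(raw: str) -> int:
    """Parse the sysctl file contents (a single integer plus a newline)."""
    return int(raw.strip())


def user_namespaces_allowed(limit: int) -> bool:
    """A positive limit means at least one user namespace may be created."""
    return limit > 0


def read_limit(path: Path = SYSCTL_PATH) -> Optional[int]:
    """Return the configured limit, or None if it cannot be read or parsed."""
    try:
        return parse_limit(path.read_text())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    limit = read_limit()
    if limit is None:
        print(f"could not read {SYSCTL_PATH}")
    elif user_namespaces_allowed(limit):
        print(f"user namespaces allowed (limit={limit})")
    else:
        print("user namespaces disabled (limit=0)")
```

If this reports a limit of 0, the node-level sysctl would be the first place to look. If it reports a positive limit, the cause is presumably something else.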

Are there any other settings that we need to be aware of to get this working?