Hello

This is my first post, after 2 weeks of trying to build my workflow.

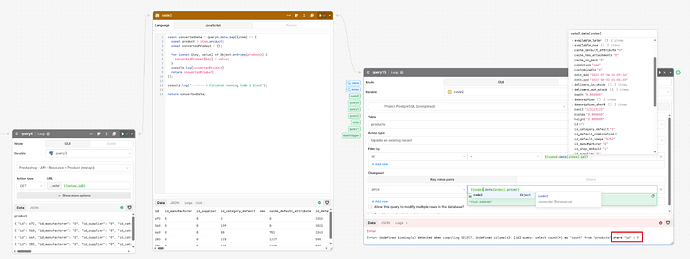

My data is coming from an API request in a loop which works fine.

Then I need to prepare an SQL update (of an existing record), which also runs in a loop.

However, I am getting this error:

Error: Undefined binding(s) detected when compiling SELECT. Undefined column(s): [id] query: select count(*) as "count" from "products" where "id" = ?

However, as you can see from the attached screenshot, {{code2.data[index].id}} does have a value.

What is wrong with my setup?


I have exactly the same issue and it is SOO frustrating. I'm hoping if it's a bug that RT can fix it quickly, or if there's another way to do this I need to know asap.


Hi @Joanna_Wu

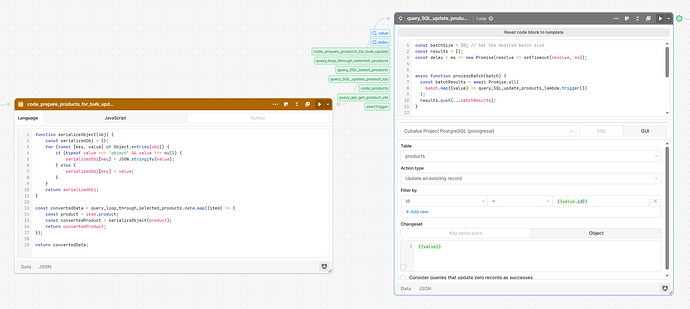

this is what i ended-up doing:

and it works OK for me.

Here's the code for it:

code_prepare_products_for_bulk_update

    // Stringify nested objects and arrays so every value is a flat scalar.
    function serializeObject(obj) {
      const serializedObj = {};
      for (const [key, value] of Object.entries(obj)) {
        if (typeof value === 'object' && value !== null) {
          serializedObj[key] = JSON.stringify(value);
        } else {
          serializedObj[key] = value;
        }
      }
      return serializedObj;
    }

    // Flatten the product of each item returned by the loop query.
    const convertedData = query_loop_through_selected_products.data.map((item) => {
      const product = item.product;
      const convertedProduct = serializeObject(product);
      return convertedProduct;
    });

    return convertedData;

The code above also handles nested objects or arrays that appear as values in any of the key:value pairs: it stringifies them.
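To make the stringifying behavior concrete, here is a small sketch that runs the same `serializeObject` logic outside Retool. The sample product and its field names are made up for illustration.

```javascript
// Same logic as above: nested objects/arrays become JSON strings,
// primitives and null pass through unchanged.
function serializeObject(obj) {
  const serializedObj = {};
  for (const [key, value] of Object.entries(obj)) {
    if (typeof value === 'object' && value !== null) {
      serializedObj[key] = JSON.stringify(value);
    } else {
      serializedObj[key] = value;
    }
  }
  return serializedObj;
}

// Hypothetical product, not taken from the real API response.
const sampleProduct = {
  id: 7,
  name: 'Lamp',
  tags: ['home', 'light'],
  dimensions: { w: 10, h: 30 },
  discontinued_at: null,
};

const flatProduct = serializeObject(sampleProduct);
console.log(flatProduct.id);              // 7
console.log(flatProduct.tags);            // ["home","light"]
console.log(flatProduct.dimensions);      // {"w":10,"h":30}
console.log(flatProduct.discontinued_at); // null
```

Note that `null` is left as-is (so it can still map to SQL NULL), while arrays are stringified just like plain objects.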

query_SQL_update_products

    const batchSize = 35; // Number of updates to run in parallel per batch
    const results = [];
    const delay = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

    // Trigger one update per record in the batch and wait for all of them.
    async function processBatch(batch) {
      const batchResults = await Promise.all(
        batch.map((value) => query_SQL_update_products_lambda.trigger())
      );
      results.push(...batchResults);
    }

    // Walk through the prepared records one batch at a time.
    async function processElements() {
      const data = code_prepare_products_for_bulk_update.data;
      for (let i = 0; i < data.length; i += batchSize) {
        const batch = data.slice(i, i + batchSize);
        await processBatch(batch);
        await delay(1000); // Wait 1 second before the next batch
      }
      return results;
    }

    return processElements();

The loop block runs the single-record updates in batches, so you can fine-tune the batch size and the delay between batches.
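For anyone who wants to try the batching pattern outside Retool, here is a minimal sketch with a mocked query. It assumes each record is handed to the query through `additionalScope` when triggering; the `product` key, the `mockUpdateQuery` object and the `updateInBatches` helper are hypothetical names for illustration, not part of my actual app.

```javascript
// Stand-in for a Retool query object. Assumption: the real query would read
// the record from additionalScope (e.g. {{ product.id }}).
const triggeredIds = [];
const mockUpdateQuery = {
  trigger: async ({ additionalScope }) => {
    triggeredIds.push(additionalScope.product.id);
    return { updated: additionalScope.product.id };
  },
};

const delay = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Run the updates batch by batch: each batch in parallel, batches in sequence.
async function updateInBatches(records, query, batchSize, pauseMs) {
  const results = [];
  for (let i = 0; i < records.length; i += batchSize) {
    const batch = records.slice(i, i + batchSize);
    const batchResults = await Promise.all(
      batch.map((record) => query.trigger({ additionalScope: { product: record } }))
    );
    results.push(...batchResults);
    // Pause only between batches, not after the last one.
    if (i + batchSize < records.length) {
      await delay(pauseMs);
    }
  }
  return results;
}

const sampleRecords = [{ id: 1 }, { id: 2 }, { id: 3 }, { id: 4 }, { id: 5 }];
const batchedRun = updateInBatches(sampleRecords, mockUpdateQuery, 2, 10);
batchedRun.then((results) => console.log(results.length)); // 5
```

A smaller batch size and a longer pause put less load on the database, at the cost of a longer total run time.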

Hey folks!

Sorry about the late reply here. It looks like this is a bug. I've filed it with the dev team and will let you know here when there's a fix.

Thanks for providing such a detailed workaround here @Periklis_Vasileiou!

Out of curiosity, if you set the changeset as an "Object" instead of using "Key value pairs" does the query work correctly?
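For reference, here is a rough sketch of how one prepared record could be split into a filter value and a changeset object. It assumes `id` is used only in the filter and every other field is updated; the sample record and the `splitRecord` helper are hypothetical.

```javascript
// Hypothetical helper: separate the filter key from the fields to update.
function splitRecord(record) {
  const { id, ...changeset } = record;
  return { id, changeset };
}

// Made-up record for illustration.
const sampleRecord = { id: 42, name: 'Desk', price: 199 };
const { id: recordId, changeset } = splitRecord(sampleRecord);

console.log(recordId);  // 42
console.log(changeset); // { name: 'Desk', price: 199 }
```

The resulting `changeset` is the kind of single object the "Object" option would take, while `recordId` stays in the filter.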

Hi @Kabirdas

Thanks for replying. I thought I was doing something wrong and that was why you had not replied. Your feedback is valuable, and I now need to revisit this approach so I can comment further.

Since I needed a working solution, I don't mind going back to the original approach, now that you have confirmed there is a bug and that I was not simply doing it the wrong way. That confirmation is what I needed.

I will test your suggestion, although I believe I already tried it with the same result. As I understand it, that is fine: if there is a bug, it is at least consistent, and both options (key-value pairs and object) fail with the same error. To me, that is better than getting two different errors.

Bottom line: I will retry what I reported, with both key-value pairs and an object, to confirm what I experienced.

In any case, I hope I am not wasting your time and that I am reporting real bugs (when I see one).

Not at all! I'm just a bit backlogged at the moment, so I've been taking a little longer to get to threads. I'm happy to help in cases where something isn't configured correctly too.

Thanks for reporting the bug; it helps reproduce the behavior someone was reporting in another thread. Let me know what you find!