The JSON data I am trying to insert or update in the loop looks like this:


    [
      {
        "Ort": "Vitoria",
        "PLZ": "01015",
        "Sel4": false,
        "PfOrt": "",
        "EMail2": "augusto@forwardergroup.com",
        "Na1": "Firma",
        "Sel5Kz": false,
        "LandBez": "Spanien",
        "Tel": "+3494555123",
        "EMail1": "beatriz@forwardergroup.com",
        "AspNr": 0,
        "ErstBzr": "LS",
        "Verteiler": 0,
        "ID": 7241,
        "StdLiKz": true,
        "PfPLZ": "",
        "Sel3": 0,
        "AendBzr": "EK",
        "PLZOrtInfo": "E-01015 Vitoria",
        "Art": 0,
        "LtzAend": "03.07.2020 14:18:49",
        "Namen": "Firma FORWARDER Group, SL",
        "Sel1": false,
        "ErstDat": "01.09.2016 14:58:59",
        "AnsNr": 0,
        "LcManuellKz": false,
        "StdReKz": true,
        "Str": "Mendigorritxu, 12",
        "Land": 724,
        "LandKennz": "E",
        "TelAbglManuellKz": false,
        "IDString": "Adr.7040.7241",
        "Sel2": false,
        "AdrNr": "74464",
        "Fax": "+3455598765",
        "AendDat": "03.07.2020 14:18:49",
        "Na2": "FORWARDER Group, SL",
        "InfoKz": false,
        "PLZInfo": "E-01015"
      },
      {
        "Ort": "Přeštice",
        "PLZ": "33401",
        "Sel4": false,
        "PfOrt": "",
        "EMail2": "tereza@forwarder.com",
        "Na1": "Firma",
        "Sel5Kz": false,
        "LandBez": "Tschechische Republik",
        "Tel": "+42044555123",
        "EMail1": "info@forwarder.com",
        "AspNr": 0,
        "ErstBzr": "LS",
        "Verteiler": 0,
        "ID": 7242,
        "StdLiKz": true,
        "PfPLZ": "",
        "Sel3": 0,
        "AendBzr": "EK",
        "PLZOrtInfo": "CZ-33401 Přeštice",
        "Art": 0,
        "LtzAend": "03.07.2020 14:18:37",
        "Namen": "Firma International Forwarder",
        "Sel1": false,
        "ErstDat": "01.09.2016 15:03:06",
        "AnsNr": 0,
        "LcManuellKz": false,
        "StdReKz": true,
        "Str": "Hlávkova 54",
        "Land": 203,
        "LandKennz": "CZ",
        "TelAbglManuellKz": false,
        "IDString": "Adr.7041.7242",
        "Sel2": false,
        "AdrNr": "74465",
        "Fax": "+4204875467",
        "AendDat": "03.07.2020 14:18:37",
        "Na2": "International Forwarder",
        "InfoKz": false,
        "PLZInfo": "CZ-33401"
      }
    ]
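Since each record has to be either inserted or updated, here is a minimal sketch of how the array above could be split before it reaches the loop. It assumes (this is not from the workflow itself) that `ID` is the key column and that `existingIds` was fetched beforehand, for example by a hypothetical `SELECT ID` query; `splitUpsert` is a hypothetical helper name.

```javascript
// Hypothetical helper: split records into inserts and updates.
// Assumptions: "ID" is the key column, and existingIds holds the IDs
// already present in the target table (fetched by an earlier query).
function splitUpsert(records, existingIds) {
  const known = new Set(existingIds); // O(1) membership checks
  const inserts = [];
  const updates = [];
  for (const record of records) {
    // Records whose ID already exists are updates; everything else is new.
    (known.has(record.ID) ? updates : inserts).push(record);
  }
  return { inserts, updates };
}

// Usage with trimmed copies of the two records above:
const { inserts, updates } = splitUpsert(
  [{ ID: 7241, Ort: "Vitoria" }, { ID: 7242, Ort: "Přeštice" }],
  [7242] // hypothetical: ID 7242 is already in the table
);
console.log(inserts.map((r) => r.ID), updates.map((r) => r.ID)); // [ 7241 ] [ 7242 ]
```

Each list could then feed its own INSERT or UPDATE step, so the loop body no longer has to decide per record.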

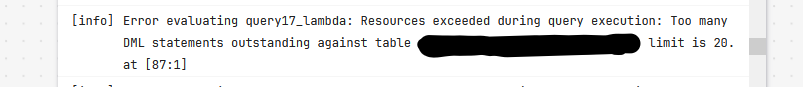

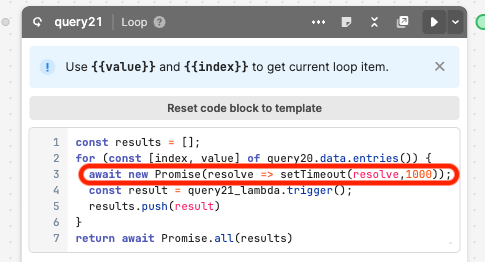

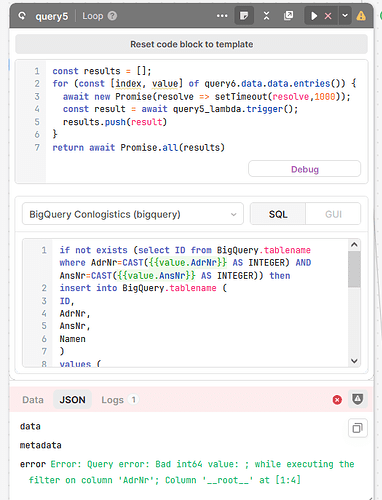

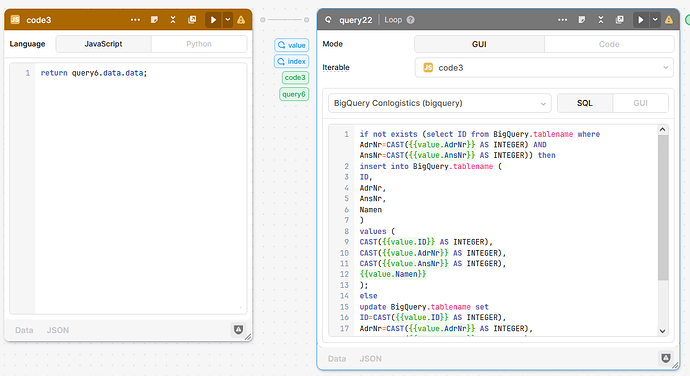

The previous version of the workflow looked like this:

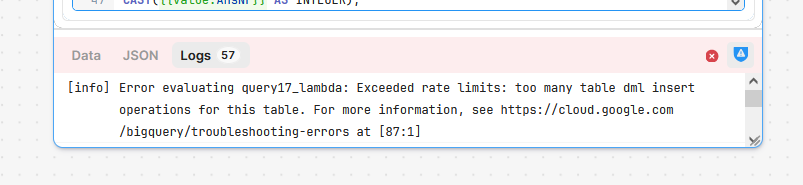

It worked until it exceeded BigQuery's rate limits after inserting several dozen records.
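One common way to stay under a per-table rate limit is to send fewer, larger requests. The sketch below groups the records into batches so the loop runs once per batch instead of once per record. `BATCH_SIZE` and `chunk` are hypothetical names, and the value 50 is an arbitrary assumption, not a documented BigQuery threshold.

```javascript
// Hypothetical batch size; tune it to the quotas that apply to your table.
const BATCH_SIZE = 50;

// Split an array into consecutive slices of at most `size` items.
function chunk(items, size) {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError("size must be a positive integer");
  }
  const batches = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}

// Usage: 120 dummy records become batches of 50, 50 and 20.
const records = Array.from({ length: 120 }, (_, i) => ({ ID: 7241 + i }));
const batches = chunk(records, BATCH_SIZE);
console.log(batches.map((b) => b.length)); // [ 50, 50, 20 ]
```

Each batch would then go into a single multi-row insert (or one MERGE for insert/update), which turns several dozen table operations into a handful.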

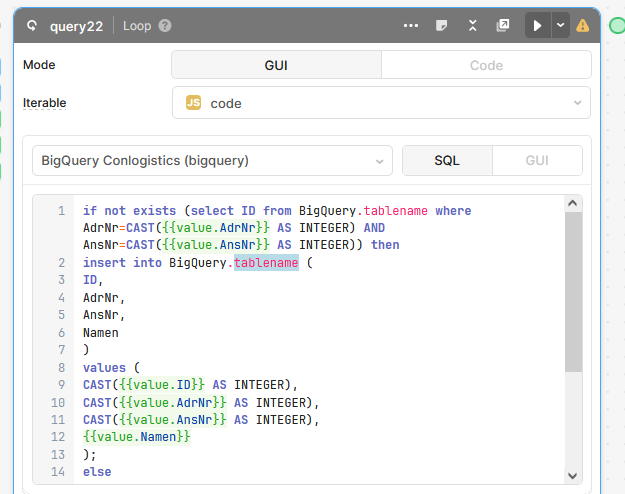

In the new loop I tried to reference the code block, and you are correct: it must be `code3`, not just `code`. But nothing works. It doesn't matter whether I use

`code3.data.data.entries()`

or

`code3.data.entries()`

I even connected the loop directly to the resource query that makes the REST request and tried the same expressions, but the values in this loop are always null or undefined.
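A plain JavaScript sketch of why both expressions can come out empty, assuming `code3` returns the JSON array itself (so `code3.data` is the array) and assuming the loop expects a plain array as its input. Neither assumption is confirmed above; the `code3` object here is a stand-in, not the real block.

```javascript
// Stand-in for the code block's output, assuming it returns the array directly.
const code3 = { data: [{ ID: 7241, Ort: "Vitoria" }, { ID: 7242, Ort: "Přeštice" }] };

// 1) An array has no .data property, so code3.data.data is undefined
//    and calling .entries() on it throws a TypeError.
let nestedError = null;
try {
  code3.data.data.entries();
} catch (err) {
  nestedError = err;
}
console.log(nestedError instanceof TypeError); // true

// 2) Array.prototype.entries() returns an iterator of [index, value] pairs,
//    not an array: it has no .length and no numeric indexes.
const iter = code3.data.entries();
console.log(Array.isArray(iter), iter.length, iter[0]); // false undefined undefined

// Passing the array itself keeps every record addressable by index.
const records = code3.data;
console.log(records.length, records[0].ID); // 2 7241
```

If the code block instead returns an object such as `{ data: [...] }`, then `code3.data.data` (without `.entries()`) would be the array; logging `code3.data` once shows which shape applies.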