One of our developers accidentally deleted a few rows in our "Timesheets" database. I'd like to see which rows were deleted and manually add them back in. A member on this forum said to post here and that a Retool staff member would reach out to us. This isn't super urgent, but it would be nice to restore them within the next two weeks. Thanks!

Hi @Jeffrey_Yu! Thanks for reaching out and for your patience. Unfortunately, we can only restore snapshots of RetoolDB within a 7-day rolling window. If it's of any help, your audit logs will show a record of the query and any parameters passed into it (most likely IDs). This could be useful if you have a backup in development, for example.

Given the time-sensitive nature of your request, ideally it would have been caught and flagged by our internal moderation workflows. We're reviewing ways to better handle this type of request going forward, since there is a hard deadline for recovery.

Additionally, we are planning development on a feature that allows customers to manage their own backups. We're well aware that this is a pain point for users like yourself and want to be as proactive as possible in preventing future data loss!

Hi Darren,

Thanks for letting us know. We'll have an internal staff member work on syncing Retool DB with external backup software. Do you have any resources on the topic, or guides on how to set up a backup system?

Also, if we ever have an urgent situation where a database is deleted or seriously damaged, is there a faster way to reach you so that we stay within that 7-day window?

Jeff

Of course! We certainly appreciate the understanding.

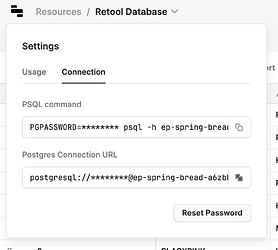

I'm actually really interested in setting up a workflow template that can automate database backups, but that project is still in its early days. In the meantime, it's fairly straightforward to create backups manually via pgAdmin or to automate them with a pg_dump script on a local server. One caveat about the latter is that the local version of PostgreSQL will need to match the version on RetoolDB, or at least not be older than it, because pg_dump can't dump from a server with a newer major version than its own. For both solutions, you'll need the RetoolDB connection string, which you can find via the Retool interface:

I'd be happy to help out with setting that up if you have any further questions!
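Because of that version caveat, it can be worth checking the local client against the server before scheduling anything. Here's a minimal sketch, assuming `pg_dump` and `psql` are both on your PATH and that a hypothetical `RETOOLDB_URL` environment variable holds the connection string you copied from Retool. It only compares major version numbers, which is how PostgreSQL 10 and later are versioned.

```shell
#!/usr/bin/env bash
# Sketch: confirm the local pg_dump is at least as new as the RetoolDB server
# before scheduling backups. Assumes pg_dump and psql are on PATH and that the
# (hypothetical) RETOOLDB_URL variable holds the RetoolDB connection string.

# Print the major version from strings like "pg_dump (PostgreSQL) 16.2"
# or "16.2 (Debian 16.2-1)": take the first dotted number, keep its first part.
# This matches PostgreSQL 10+ numbering, where the major version is one number.
major_version() {
  printf '%s\n' "$1" | grep -oE '[0-9]+(\.[0-9]+)*' | head -n 1 | cut -d. -f1
}

# Compare client and server major versions; fail if the client is older.
check_versions() {
  local url="$1" local_major server_major
  local_major="$(major_version "$(pg_dump --version)")"
  # -t prints tuples only and -A disables alignment, so we get just the value.
  server_major="$(major_version "$(psql "$url" -tAc 'SHOW server_version')")"
  if [ "$local_major" -lt "$server_major" ]; then
    echo "pg_dump $local_major is older than server $server_major; upgrade the client tools" >&2
    return 1
  fi
  echo "OK: pg_dump $local_major can dump server version $server_major"
}

# Only contact the database when a connection string has been provided.
if [ -n "${RETOOLDB_URL:-}" ]; then
  check_versions "$RETOOLDB_URL"
fi
```

If the check fails, installing a newer PostgreSQL client package is usually enough; the server side doesn't need to change.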

As to your second question, we are currently discussing how best to ensure that time-sensitive issues have a clear channel of communication. For example, we're considering a form whose submissions would go into a daily queue. We'll let you know as soon as we have a system in place!

Thanks, Darren, for the information! I’ll have our internal staff comment on this thread and continue the discussion about setting up these backups.

Hi Darren,

I'm Maydeline, and I'm in charge of this project. I'm considering implementing the Google Drive automation now and revisiting the integration in spring 2025, when your team may have developed the new backup workflow. This timing aligns with our plans to enhance our internal database design with features like caching and sharding.

Could you provide insights on how the Google Drive automation would function if we proceed with it now? Additionally, I would appreciate more details about the pg_dump automated script you mentioned.

Thank you for your assistance!

Welcome to the community forum, @Maydeline! I'm happy to help.

Can you describe the Google Drive automation that you're looking to implement? I don't think it was something we discussed previously, unless I'm misunderstanding.

As to pg_dump, the syntax is really quite simple, but it has a lot of powerful use cases. The only prerequisite is having the PostgreSQL client tools (which include pg_dump) installed for whatever Unix operating system you're running. After that, the basic command is just `pg_dump postgres://username:password@my_postgres_server/databasename > file_name.sql`, but you can tweak this basic behavior quite a bit. There's a good explanation here! For example, I'd recommend storing your backups in the .tar format (`-F t`). This link also includes an explanation of automating the process with cron.
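To make that concrete, here's a rough sketch of a backup script that writes tar-format archives with timestamped names, plus a sample crontab line. The variable names `RETOOLDB_URL` and `BACKUP_DIR`, the default directory, and the script path in the cron comment are all hypothetical placeholders, not anything Retool provides.

```shell
#!/usr/bin/env bash
# Sketch of a scheduled RetoolDB backup using pg_dump's tar format (-F t).
# Assumptions: RETOOLDB_URL holds the connection string, and BACKUP_DIR
# (default ~/retooldb_backups) is where archives go. Both names are hypothetical.

# Build a file name such as retooldb_2024-05-01_0200.tar from a timestamp.
backup_filename() {
  printf 'retooldb_%s.tar\n' "$1"
}

# Dump the database to a timestamped tar archive and print its path.
run_backup() {
  local url="$1" dir="$2" file
  mkdir -p "$dir"
  file="$dir/$(backup_filename "$(date +%Y-%m-%d_%H%M)")"
  # -F t writes a tar-format archive; -f names the output file.
  # pg_dump accepts a full connection string in place of a database name.
  pg_dump -F t -f "$file" "$url" || return 1
  printf '%s\n' "$file"
}

if [ -n "${RETOOLDB_URL:-}" ]; then
  run_backup "$RETOOLDB_URL" "${BACKUP_DIR:-$HOME/retooldb_backups}"
fi

# Example crontab entry (edit with `crontab -e`) running a hypothetical copy of
# this script at 02:00 daily. The timestamp is built inside the script because
# a literal % in a crontab line has a special meaning and would need escaping.
# 0 2 * * * RETOOLDB_URL='postgres://...' /usr/local/bin/retooldb_backup.sh
```

A tar-format archive can later be restored with `pg_restore`. Rather than putting the password in the crontab line, you could keep it in a `~/.pgpass` file, which the PostgreSQL client tools read automatically.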

As I noted previously, you can find all the necessary information for constructing the connection string via the Retool interface.

I hope that helps! Don't hesitate to follow up here if you have additional questions.

Thanks, Darren, for the insights! We decided to go with the cruder automatic CSV upload because it's faster to implement, but we'll definitely revisit a more sophisticated pg_dump setup next spring!
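For anyone taking the same CSV route, one possible way to produce the files is psql's client-side `\copy`, which writes the CSV on the machine running psql rather than on the database server. This is only a sketch of the export step (it doesn't cover the Google Drive upload), and the table name `timesheets` and the `RETOOLDB_URL` variable are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: export one RetoolDB table to CSV with psql's client-side \copy.
# The table name "timesheets" and RETOOLDB_URL are hypothetical placeholders.
# Note: the table name is inserted as-is, so only pass trusted names.

# Build the \copy meta-command for a table and an output path.
copy_command() {
  # $1: table name, $2: output CSV path
  printf '%s\n' "\\copy $1 TO '$2' WITH (FORMAT csv, HEADER)"
}

# Run the export against the database given by the connection string.
export_csv() {
  local url="$1" table="$2" out="$3"
  # psql -c accepts a single backslash meta-command such as \copy.
  psql "$url" -c "$(copy_command "$table" "$out")"
}

if [ -n "${RETOOLDB_URL:-}" ]; then
  export_csv "$RETOOLDB_URL" timesheets "timesheets_$(date +%Y-%m-%d).csv"
fi
```

Unlike a pg_dump archive, a CSV only captures the rows of one table, so restoring means re-importing each table's file yourself.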