Hello again forum!

I'm trying to replicate data from a MySQL database to a BigQuery table using a Retool Workflow. I'm following the methods discussed here and here, using the legacy loop block to insert the data in batches. To stay within BigQuery's DML quota limits, I added a 10-second delay after every 20 triggers.

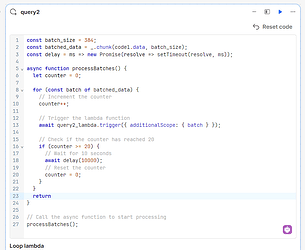

Code:

```javascript
const batch_size = 384;

// Split the rows returned by code1 into batches of batch_size
const batched_data = _.chunk(code1.data, batch_size);

const delay = ms => new Promise(resolve => setTimeout(resolve, ms));

async function processBatches() {
  let counter = 0;

  for (const batch of batched_data) {
    // Increment the counter
    counter++;

    // Trigger the lambda function
    await query2_lambda.trigger({ additionalScope: { batch } });

    // Check if the counter has reached 20
    if (counter >= 20) {
      // Wait for 10 seconds
      await delay(10000);

      // Reset the counter
      counter = 0;
    }
  }
}

// Call the async function and wait for it, so the block does not
// return before every batch has been triggered
await processBatches();
```
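To check the batching and throttling logic outside Retool, here is a minimal sketch in plain JavaScript. `planBatches` and `runPlan` are hypothetical helper names, not Retool APIs, and the sketch uses `slice` instead of lodash's `_.chunk`. The trigger and sleep functions are passed in, so the schedule can be tested without touching BigQuery. One deliberate difference from the loop above: it skips the pause after the final batch.

```javascript
// Hypothetical helper: turn rows into an ordered plan of "trigger" and
// "pause" steps. groupSize triggers run back to back, then one pause.
// No pause is added after the final batch.
function planBatches(rows, batchSize, groupSize) {
  if (!(batchSize > 0) || !(groupSize > 0)) {
    throw new RangeError("batchSize and groupSize must be positive");
  }
  const steps = [];
  const batchCount = Math.ceil(rows.length / batchSize);
  for (let i = 0; i < batchCount; i++) {
    const batch = rows.slice(i * batchSize, (i + 1) * batchSize);
    steps.push({ type: "trigger", batch });
    const isLast = i === batchCount - 1;
    if ((i + 1) % groupSize === 0 && !isLast) {
      steps.push({ type: "pause" });
    }
  }
  return steps;
}

// Hypothetical helper: execute a plan step by step and return the number
// of batches triggered. `trigger` and `sleep` are injected so tests can
// replace them with no-op functions.
async function runPlan(steps, trigger, sleep, pauseMs) {
  let triggered = 0;
  for (const step of steps) {
    if (step.type === "trigger") {
      await trigger(step.batch);
      triggered++;
    } else {
      await sleep(pauseMs);
    }
  }
  return triggered;
}

// Possible use inside a Retool JS block (an untested assumption, reusing
// the names from the code above):
// const plan = planBatches(code1.data, 384, 20);
// await runPlan(
//   plan,
//   (batch) => query2_lambda.trigger({ additionalScope: { batch } }),
//   (ms) => new Promise((resolve) => setTimeout(resolve, ms)),
//   10000
// );
```

For example, 10,000 rows with a batch size of 384 give 27 batches (26 full batches plus one of 16 rows) and a single pause after the 20th batch.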

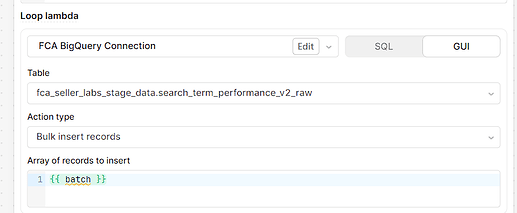

Loop block:

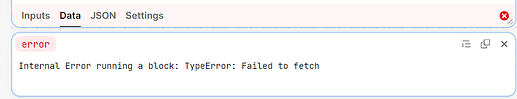

When executing the workflow block by block, the loop block throws this error after a while:

- `metadata`: `{}` (0 keys)
- `error`: `Internal Error running a block: TypeError: Failed to fetch`

However, when I check BigQuery, all of the data has been inserted, so the workflow seems to be working correctly.

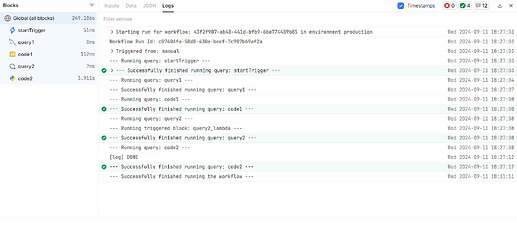

I also ran the whole workflow to see whether the run logs contained a more detailed version of the error, as suggested here, but they showed no additional details.

Maybe the loopv2 block is better suited for this? I'm worried this error may cause problems later, since I want to scale this workflow further. Any insights, best practices, or tips on how to do this would be greatly appreciated!