I have what seems like a pretty straightforward workflow with a moderate amount of data, but it eventually hangs the browser and does not complete.

My workflow grabs about 20k rows from a Google Sheet, performs a loop on that data to rename some of the field names and then does another loop to upsert the data into the default Postgres managed_db.

When I run the steps individually (via the run button above each step), grabbing the data takes only a few seconds. The second step, the loop that renames fields, then gets stuck on the loading spinner, and eventually Chrome tells me the page appears to have hung and asks whether I want to wait or exit.

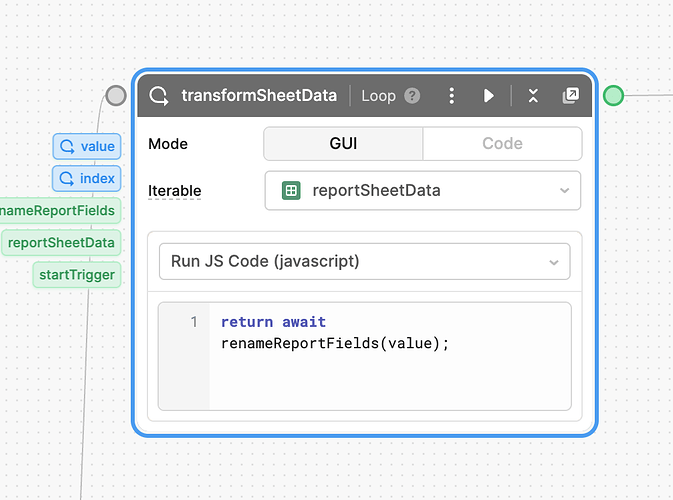

The loop in this step just calls a function:

    const columnNameMappings = {
      'Bundle ID': 'bundleId',
      'Campaign ID': 'campaignId',
      'Campaign Name': 'campaignName',
      // ... more column names
    };

    const { Date, ...rest } = row;

    return {
      Date: moment(Date).format('YYYY-MM-DD'),
      ...Object.keys(rest).reduce(
        (acc, field) => ({
          ...acc,
          [columnNameMappings[field] || field]: row[field],
        }),
        {}
      ),
    };
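
For comparison, here is a minimal sketch of the same rename done over the whole array in a single JavaScript step instead of a per-row loop. I have not confirmed that this avoids the hang. It assumes the sheet data arrives as an array of plain row objects. `renameRow`, `renameRows`, and the `formatDate` parameter are hypothetical names I made up for illustration, and the mapping is abbreviated the same way as above.

```javascript
// Abbreviated mapping, same as in the loop function (more column names omitted).
const columnNameMappings = {
  'Bundle ID': 'bundleId',
  'Campaign ID': 'campaignId',
  'Campaign Name': 'campaignName',
};

// Rename the fields of one row. formatDate is passed in (an assumption for
// this sketch) so the snippet does not depend on moment being loaded; in the
// workflow it could be (d) => moment(d).format('YYYY-MM-DD').
function renameRow(row, formatDate) {
  // Alias Date to date so the global Date constructor is not shadowed.
  const { Date: date, ...rest } = row;
  const out = { Date: formatDate(date) };
  // Mutate one output object instead of spreading a new accumulator per field.
  for (const [field, value] of Object.entries(rest)) {
    out[columnNameMappings[field] || field] = value;
  }
  return out;
}

// Apply the rename to every row in one pass.
function renameRows(rows, formatDate) {
  return rows.map((row) => renameRow(row, formatDate));
}
```

Calling `renameRows(rows, (d) => moment(d).format('YYYY-MM-DD'))` should return the same shape of objects as the loop version, with unmapped field names passed through unchanged.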

I ran this on a smaller table with only a few rows and it worked fine. Is 20k rows just too much data to process in the browser? Each row has 12 fields, and the sheet is about 2.8 MB when downloaded as a CSV.

Presumably when I set this up as an active workflow, it'll run on the Retool backend and might be more successful.