Hello @Sydney_Young!

Sorry for the late response!

Thank you for sharing that test file. I was able to upload it to my GCS account and test it along with another GLB file I made.

I can confirm that running your script caused the files to increase in size:

- Your file went from 659.7 KB to 901 KB.
- A file I made to double-check went from 979.3 KB to 1.3 MB.

After doing some research, it seems that converting the binary data to Base64 with `bytesToBase64` is likely the culprit, because each group of 3 bytes (24 bits) of binary data is converted into 4 Base64 characters (6 bits each).

- This adds ~33% overhead because:
  - 4/3 ≈ 1.3333 (33% more data).
  - Additional padding characters (`=`) may slightly increase the size.
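The overhead above can be checked with a quick sketch. `base64Length` and `encodedLengthViaBtoa` are hypothetical helper names. The formula `4 * ceil(n / 3)` is the standard padded Base64 length, and the loop cross-checks it against the built-in `btoa` (available in browsers and in Node 16+).

```javascript
// Predict the length of a padded Base64 string for a given number of bytes.
// Every group of 3 bytes (24 bits) becomes 4 characters (6 bits each);
// a final partial group is padded with "=" up to a full 4 characters.
function base64Length(byteCount) {
  return 4 * Math.ceil(byteCount / 3);
}

// Cross-check with btoa, which takes a "binary string" (one char per byte).
function encodedLengthViaBtoa(byteCount) {
  const binaryString = "\x00".repeat(byteCount);
  return btoa(binaryString).length;
}

for (const n of [1, 2, 3, 4, 300, 3000]) {
  const predicted = base64Length(n);
  const actual = encodedLengthViaBtoa(n);
  console.log(n, predicted, actual, (predicted / n).toFixed(3));
}
```

For larger inputs the ratio settles at about 1.333, which matches the ~33% growth described above.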

I then tried changing the script, since it seemed that `query.data.body` already returns a Base64-encoded string. If we could remove the extra encoding step, that should prevent the file bloat.
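Assuming the original script effectively Base64-encoded a string that was already Base64, here is a minimal sketch of why that would both inflate and corrupt the file. It uses the standard `btoa`/`atob` functions rather than `bytesToBase64`, and the 12-byte "GLB header" is a made-up example, not data from your file.

```javascript
// The "file": a made-up 12-byte GLB-style header held as a binary string
// (one character per byte), which is the format btoa/atob work with.
const originalBytes = "glTF\x02\x00\x00\x00\x0c\x00\x00\x00";

// Assumed: the query body already holds the file encoded once.
const encodedOnce = btoa(originalBytes);
// Assumed: the old script effectively encoded that text a second time.
const encodedTwice = btoa(encodedOnce);

// A download helper that decodes Base64 once recovers...
const decodedFromOnce = atob(encodedOnce);   // ...the original bytes
const decodedFromTwice = atob(encodedTwice); // ...only the Base64 text

console.log(encodedOnce.length, encodedTwice.length); // 16 24
console.log(decodedFromOnce === originalBytes);       // true
console.log(decodedFromTwice === originalBytes);      // false
console.log(decodedFromTwice);                        // Z2xURgIAAAAMAAAA
```

The second encoding adds another ~33% on top, and the "decoded" file starts with `Z2xU` instead of the `glTF` magic bytes, which would be consistent with Blender refusing to import it.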

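Using this script:

```javascript
// query1.data.body already holds the file as a Base64 string,
// so pass it straight through without re-encoding it
const base64Data = query1.data.body;

utils.downloadFile({ base64Binary: base64Data }, "newfilename", "glb");
```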

I found that the file size did not change, and these files imported into Blender successfully!

Let me know if this works for you! It looks like you tried a similar script with `utils.downloadFile(Base64Binary: downloadGLB.data.Base64Data)`, but I am hoping that if you save `query.data.body` to a `const` variable and then pass that into the util function, it will work as it did for me!
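If you want to confirm that `query1.data.body` really is a Base64-encoded GLB before downloading it, a check along these lines might help. `looksLikeBase64Glb` is a hypothetical helper, not a Retool utility. It relies on the glTF 2.0 binary format, where every GLB file begins with the ASCII magic `glTF` followed by a little-endian `uint32` version (2).

```javascript
// Hypothetical helper: does this Base64 string decode to a GLB file?
// A GLB starts with a 12-byte header: magic "glTF", version (uint32 LE),
// total length (uint32 LE). 16 Base64 chars decode to exactly 12 bytes.
function looksLikeBase64Glb(base64String) {
  if (typeof base64String !== "string") return false;
  let header;
  try {
    header = atob(base64String.slice(0, 16));
  } catch (err) {
    return false; // not valid Base64 at all
  }
  if (header.length < 12) return false;

  const magic = header.slice(0, 4);
  const version =
    header.charCodeAt(4) |
    (header.charCodeAt(5) << 8) |
    (header.charCodeAt(6) << 16) |
    (header.charCodeAt(7) << 24);
  return magic === "glTF" && version === 2;
}
```

Calling `looksLikeBase64Glb(query1.data.body)` should return `true` for a body that can be passed straight to `utils.downloadFile`, while a double-encoded string (one that starts with `Z2xU...` once decoded) returns `false`.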


Every file I downloaded using the script that converts the string to binary and then to Base64 gave me an error when importing into Blender, so I can confirm that this conversion is corrupting the files in some way. The only files that imported successfully were the ones I downloaded with the script I shared above.

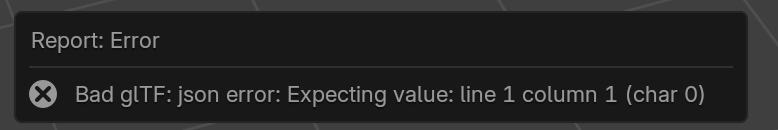

They gave me this error:

Hope this helps and let me know if it works for you!