Hi,

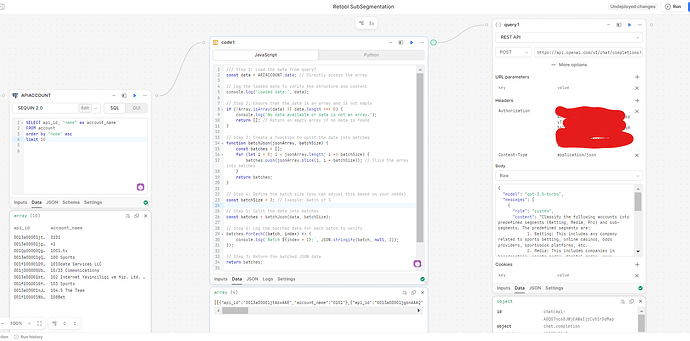

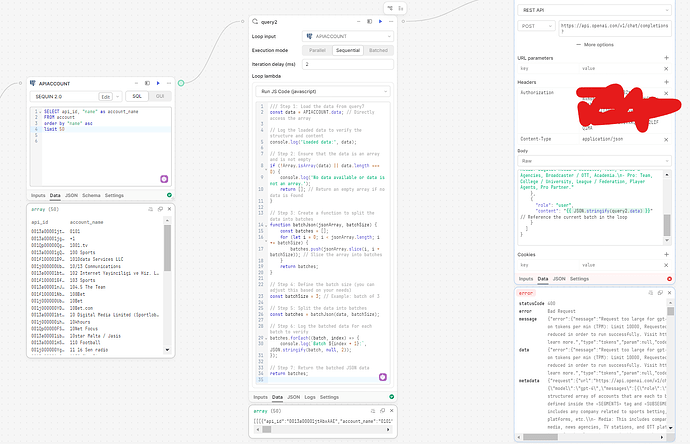

I am wanting to send account data from my postgres instance to my openapi to populate segment sub segment data. The dataset I have is pretty large so I want to do this in batches. I cant get the rest api to iterate through the batches? Any idea what I am doing wrong?

Thanks

This is what I have done -

Step 1 -

SELECT api_id, "name" as account_name

FROM account

limit 50

Step 2 -

Loop sql query batch size 10

Step 3 -

Convert loop to Json

/// Step 1: Load the data from query7

const data = APIACCOUNT.data; // Assuming query7.data is already formatted as an array

// Log the loaded data to verify

console.log('Loaded data:', data);

// Step 2: Transform the data to NDJSON format

const ndjson = data.map(item => JSON.stringify(item)).join('\n');

// Log the transformed NDJSON data to verify

console.log('NDJSON data:', ndjson);

// Step 3: Return the transformed data

return ndjson;

Step 4 -

REST API to OPENAPI as follows

{

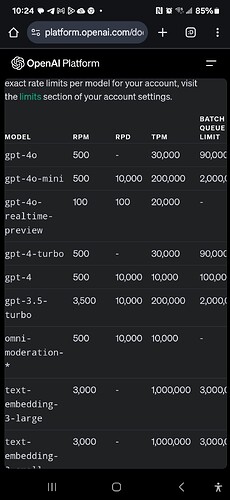

"model": "gpt-3.5-turbo",

"messages": [

{

"role": "system",

"content": "Classify the following accounts into predefined segments (Betting, Media, Pro) and sub-segments. The predefined segments are:

1. Betting: This includes any company related to sports betting, online casinos, odds providers, sportsbook platforms, etc.

2. Media: This includes companies in broadcasting, sports media, digital media, news agencies, TV stations, and OTT platforms.

3. Pro: This is for any company related to professional sports organizations, such as teams, leagues, federations, and stadiums.

The sub-segments under these categories are:

- Betting: Sportsbook, 3rd Party Betting Partner, Fantasy, Gaming & Affiliates, Professional Bettors & Syndicates.

- Media: Digital Media & Websites, Tech, Brands & Agencies, Broadcaster / OTT, Academia.

- Pro: Team, College / University, League / Federation, Player Agents, Pro Partner."

},

{

"role": "user",

"content": "Please classify the following accounts and return the result as a JSON object with keys 'api_id', 'account_name', 'segment', and 'subsegment' for each account. Here are the accounts: {{code1.data}}"

}

]

}