I have a CSV file with 300K+ entries that I need to upload to Firebase Firestore. I am doing this with a recursive method that makes one upload call per row.

Here is the code I have, which works correctly:

const rows = gameStatsFileButton.parsedValue[0]; // This basically gets the number of rows in the document.

// This is a delay function I made

async function delay(ms) {

console.log("delay called for" + ms);

return new Promise(resolve => setTimeout(resolve, ms))

}

//This is the function that triggers the method to upload the data

async function uploadCall(thisData, index) {

console.log("Uploaded", index);

await UploadGameStatsToFirebase.trigger({

additionalScope:{

data:thisData,

},

onSuccess: function(data) {}

});

}

// This is the main Loop function.

// It is called recursively, to loop through the contents of CSV file and call

// the upload method to upload that row.

async function runQuery(i) {

if(i >= rows.length) {

console.log("Finished running all queries");

return;

}

var data = rows[i];

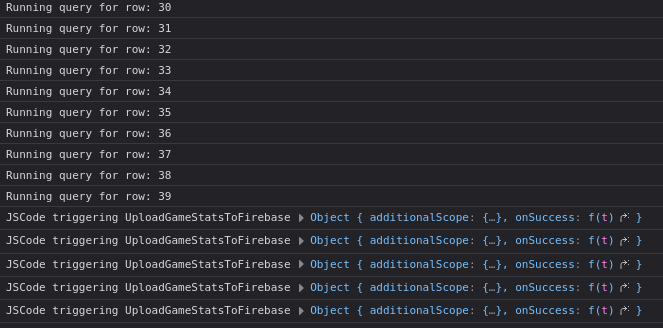

console.log("Running query for row" + I);

await delay(2000); // calling delay method to add a delay of 2 seconds.

uploadCall(data, i);

runQuery(i+1);

}

runQuery(0);

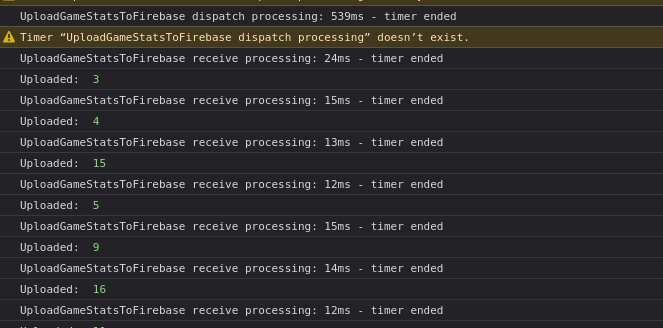

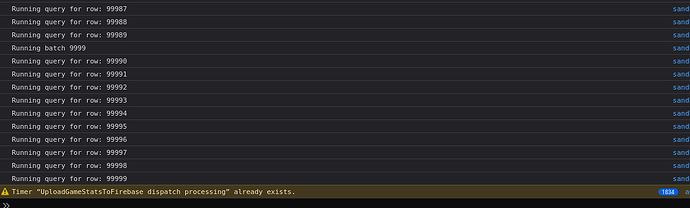

Since the CSV has so many rows, the upload will obviously take some time. But with my current implementation it takes ridiculously long, and after a while it crashes the browser, because it overloads the thread (from my understanding, at least).

Also, from my understanding, I cannot run the calls strictly one after another: each API call takes 2s, so 300K rows would take 600K seconds (roughly a week) to upload. That is not practical at all.
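To make that arithmetic concrete, here is a rough back-of-the-envelope sketch. `estimateSeconds` is a hypothetical helper, the 2s per call is my own figure from above, and the concurrency of 20 is only an illustrative number, not a known Firestore limit.

```javascript
// Hypothetical helper: rough total time if `concurrency` calls run at once
// and every call takes `secondsPerCall` seconds.
function estimateSeconds(rowCount, secondsPerCall, concurrency = 1) {
  return Math.ceil(rowCount / concurrency) * secondsPerCall;
}

const sequential = estimateSeconds(300000, 2);   // 600000 seconds
const parallel20 = estimateSeconds(300000, 2, 20); // 30000 seconds (assumed concurrency)

console.log((sequential / 86400).toFixed(2) + " days");  // "6.94 days"
console.log((parallel20 / 3600).toFixed(2) + " hours");  // "8.33 hours"
```

So even a modest amount of overlap between calls changes the picture from days to hours, if the backend tolerates it.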

With the methods I have, I can only insert one row at a time into Firestore. What is the efficient and/or industry-standard way to make a large number of async API calls?
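For reference, the kind of pattern I have in mind is a bounded-concurrency pool: a fixed number of calls in flight at once, with each "lane" picking up the next row as soon as its previous call settles. This is only a minimal sketch, assuming the upload function returns a promise that settles when the write completes. `runWithConcurrency` and the limit of 20 in the usage note are hypothetical names and values, not part of any library.

```javascript
// Hypothetical helper: run `worker` over every item with at most `limit`
// calls in flight at any moment. Failures are recorded, not thrown, so one
// bad row does not stop the rest.
async function runWithConcurrency(items, limit, worker) {
  const results = new Array(items.length);
  let next = 0; // index of the next unclaimed item

  async function lane() {
    while (next < items.length) {
      const i = next++; // claimed synchronously, so no two lanes share an index
      try {
        results[i] = { ok: true, value: await worker(items[i], i) };
      } catch (error) {
        results[i] = { ok: false, error };
      }
    }
  }

  // Start up to `limit` lanes and wait until all of them run out of items.
  const laneCount = Math.min(limit, items.length);
  await Promise.all(Array.from({ length: laneCount }, () => lane()));
  return results;
}
```

With the names from my code, the call would look something like `await runWithConcurrency(rows, 20, (row, i) => uploadCall(row, i));`. The loop is iterative rather than recursive, so it does not build up a chain of 300K pending calls, and the failed entries in the result can be retried afterwards.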