Funny, you caught me preparing an answer to this --

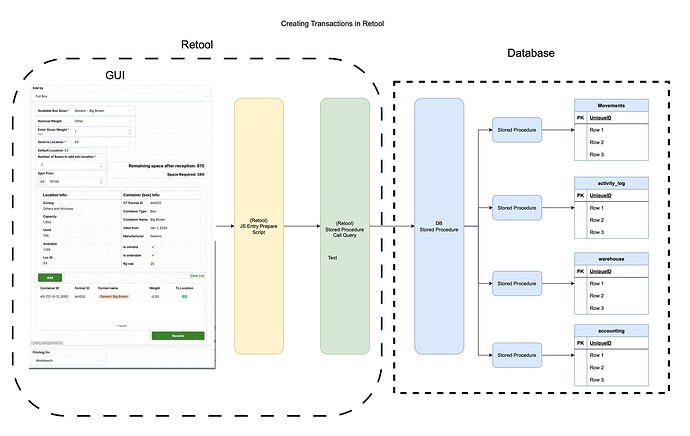

So describe a bit in basic terms I made this chart. (at the bottom)

Essentially from whatever GUI you have; a form, a table or anything, you can create a js Query that will prepare your entry into the database.

Before you can submit it, you'll need to create whatever necessary stored procedures.

In my case, the form in the picture (and chart) essentially hits 4 tables (sometimes 5). Given that a couple of them are very mundane like accounting entries and activity logs, I created a stored procedure for each.

This is how my stored proc looks for the accounting entry.

CREATE OR REPLACE PROCEDURE

proc_NEW_ACCOUNTING_ENTRY(

entry_reference TEXT,

entry_timestamp TIMESTAMPTZ,

amount_kg NUMERIC,

amount_money NUMERIC,

debit_account TEXT,

credit_account TEXT,

action_done_by TEXT,

weighing_session_id NUMERIC DEFAULT NULL,

purchase_order_id NUMERIC DEFAULT NULL,

movement_id NUMERIC DEFAULT NULL,

entry_commentary TEXT DEFAULT NULL)

AS $$

BEGIN

-- Insert accounting entries

INSERT INTO f_powder_accounting (

ws_id,

po_id,

mov_id,

entry_ref,

entry_ts,

mtype,

amount_kg,

amount_money,

account_id,

done_by,

is_valid,

entry_comments

)

VALUES

-- Debit entry

(

weighing_session_id,

purchase_order_id,

movement_id,

entry_reference,

entry_timestamp,

'debit',

amount_kg,

amount_money,

debit_account,

action_done_by,

true,

entry_commentary

),

-- Credit entry

(

weighing_session_id,

purchase_order_id,

movement_id,

entry_reference,

entry_timestamp,

'credit',

-amount_kg,

-amount_money,

credit_account,

action_done_by,

true,

entry_commentary

);

RAISE NOTICE 'All operations completed successfully';

EXCEPTION WHEN OTHERS THEN

-- If any error occurs, raise

RAISE NOTICE 'Error occurred: %', SQLERRM;

RAISE;

END;

$$

LANGUAGE plpgsql

You can naturally pass info as arguments or create variables in any.

Given that I re-use this somewhat often, I can call this procedure from another procedure, a (very) simplified version of that looks like this:

(this contains errors, is missing variables, and a few things, but the idea is there)

CREATE OR REPLACE PROCEDURE proc_FORM_ENTRY(p_data JSONB)

AS $$

DECLARE

v_entry_ts TIMESTAMPTZ;

v_debit_entry_id NUMERIC;

v_credit_entry_id NUMERIC;

v_pct_id TEXT;

v_ct_qty INTEGER;

v_is_new BOOLEAN;

amt_money NUMERIC;

BEGIN

v_entry_ts := (p_data->>'entry_ts')::TIMESTAMPTZ;

amt_money := (p_data->>'amt_money')::NUMERIC;

CALL proc_MOVEMENTS(p_data, amt_kg, amt_money);

-- Accounting

CALL proc_ACCOUNTING(entry_ref, entry_ts, amt_kg, amt_money, debit_acc, credit_acc, done_by)

CALL proc_WAREHOUSE(entry_ref, entry_ts, box_id, amt_kg, amt_money, done_by);

-- Check if new

CASE WHEN v_is_new = TRUE THEN

CALL proc_NEW_BOX(box_data::JSONB)

END;

RAISE NOTICE 'All operations completed successfully';

EXCEPTION WHEN OTHERS THEN

-- If any error occurs, raise

RAISE NOTICE 'Error occurred: %', SQLERRM;

RAISE;

END;

$$

LANGUAGE plpgsql

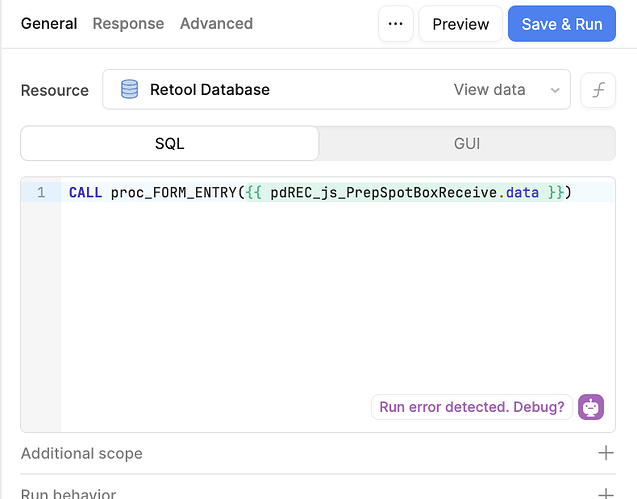

Then in the Retool editor, you would call this "proc_FORM_ENTRY" procedure from a regular SQL query that would look like this:

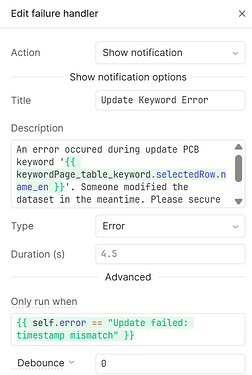

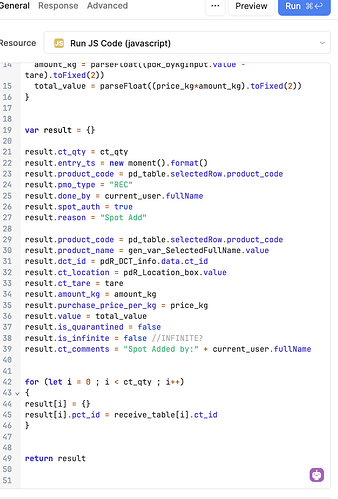

And in turn, that SQL gets executed using an event handler after the initial "form entry prep" JS query that in my case looks a bit like this:

It's a fair bit contrived, but it has worked quite well for me so far and it seems to be the most solid way to guarantee transactions going in full.

Given that you can call other procedures or functions from procedures I have been creating a few key procedures that sit before the table, and those are called from another one that takes the shape of whatever necessary transaction, so for something I just need 2 or 3, I just call those rather than writing a full procedure for each movement that then becomes more codebase to be maintained and serviced. (At the cost of breaking more things if the shape is changed, naturally).

Lastly, here's a diagram with the overview of all this.

Good luck and hope it helps!