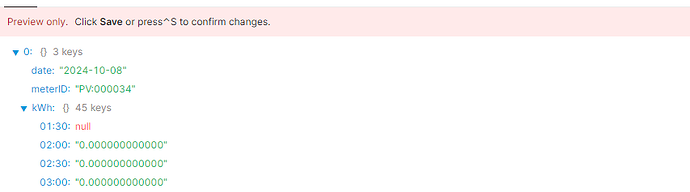

Hi, thanks for the comment. I have been making some progress. The data is utility consumption data in Half Hour increments, and is destined for a .csv file. Each line would be MeterID, Date, Value1, Value2,......Value48 in Base Time (GMT for us) not Daylightlight saving.

My JS is non-existent but my VB is good so learning fast hopefully.

The drop a level turned out to be easy, just needed to remove .kWh from

result[dateKey].kWh[moment(date_time).format('HH:mm')] = consumption_kwh;

I then ran into some issues:

It was using just the date as dateKey so it was overwriting each meter so fabricated dateKey as date+meterId which worked.

const dateKey = moment(date_time).tz("UTC").format('YYYY-MM-DD')+[meterid]

Some DaylightSaving issues, resolved with .tz("UTC")

And sorted the 00:00 issue. With utility data it's 'always' a period ends time stamp. So Today at 00:00 is for consumption yesterday between 23:30 and 00:00 so belongs to yesterday. And today starts with the 00:30 value which runs from 00:00. Some American systems use the abomination that is 24:00 which is today's date but the same as tomorrow's 00:00 (yuck). I deducted a microsecond from all the time stamps in the SQL read of data from DB. Then midnight less a microsecond has yesterdays date. Then just had to drop the first line from each output. (I need the previous value as the data is meter readings, so use lag to get the difference (consumption)

And I think I am there. Got some final testing to do to prove data matches all the way through but initial checks look good.

function transformExportDB() {

const tableData = formatDataAsArray({{ exportDB.data }});

//const firstelement = tableData.shift()

//only gets rid of first midnight value

const transformedData = _.reduce(tableData, (result, row) => {

const { date_time, meterid, consumption_kwh } = row;

const dateKey = moment(date_time).tz("UTC").format('YYYY-MM-DD')+[meterid];

// If statement to get rid of first orphaned value

if (moment(date_time).tz("UTC").format('YYYY-MM-DD') >

moment({{ exportDB.data.date_time[0]}}).tz("UTC").format('YYYY-MM-DD' )) {

if (!result[dateKey]) {

result[dateKey] = { date: moment(date_time).tz("UTC").format('YYYY-MM-DD'), meterID: meterid, };

}

result[dateKey][moment(date_time).tz("UTC").format('HH:mm')] = consumption_kwh;

} else {

console.log(meterid + " - "

+ moment(date_time).tz("UTC").format('YYYY-MM-DD HH:mm:ss') + " - Orphan Line") //Comment of doing nothing

}

return result;

}, {});

return Object.values(transformedData);

}

return transformExportDB();

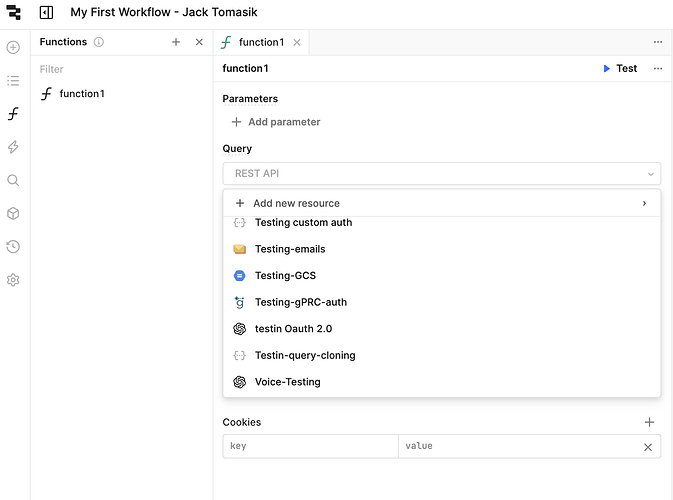

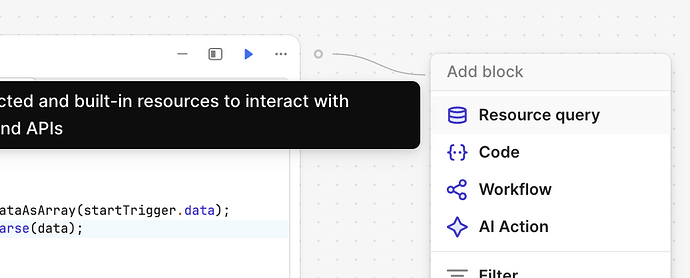

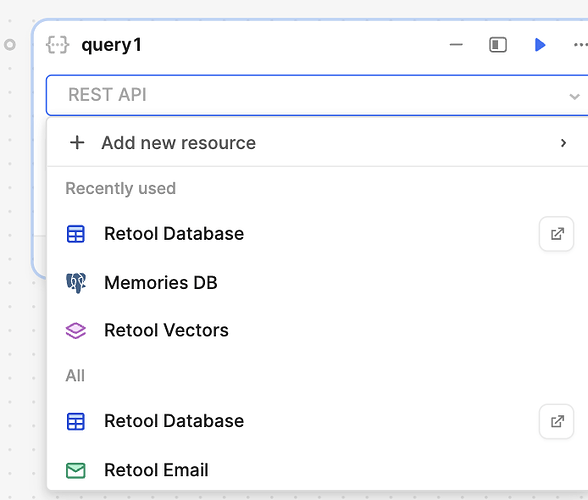

Now just need to work out how the Workflow bit works. It seems I can't run the JS script that calls the API with the relevant meter details on the app to automate it. Do I need to rebuild the JS Script and associated queries that picks up all the meters that are queried via the API and build this in Workflow?