Hey @victoria  ,

,

I am wanting to convert a Bulk Update/Upsert via GUI Mode to a Snowflake Merge Statement in SQL Mode. Wanting to do this conversion so I can get around the performance limitations of the Bulk Update/Upsert in GUI Mode.

My use case is bulk updating a table I have on Snowflake with data uploaded via a CSV file.

Snowflake Merge Doc: MERGE — Snowflake Documentation

My attempt at a conversion syntax:

merge into snowflake.table k

using (select * from {{ formatDataAsArray(upload_file.data) }} ) as b on k.id = b.id

when matched then update set k.column1 = b.column1, k.column2 = b.column2

Or, is there any method like chunking an array of updates?

1 Like

Also looking for solution for the same. Thanks

Sorry for the delay here everyone!

We've been tinkering with this and one solution that a user we spoke with recently found is to use a transient table from the csv data. Then run the merge on the original table and the temp table.

As a solution, I used transient table:

1. CREATE transient table temp (….)

2. Insert data using GUI mode by using hardcoded table name

3. Merge into PUBLIC_DATASETS.DATASET_NAME as target using (SELECT * from PUBLIC_DATASETS.temp) as SOURCE on target.id = source.id when matched then UPDATE set target.COMPANY_NAME = SOURCE.company_name when not matched then insert (id, company_name) values (SOURCE.id, source.company_name)

4. Drop table PUBLIC_DATASETS.temp

The limitation here being is that the table names can't be unique for different Retool sessions.

I also wonder if something like the lodash (a library included in Retool) _.chunk method could help us still use the GUI mode, just broken down into smaller chunks. Let me know if something like this could work!

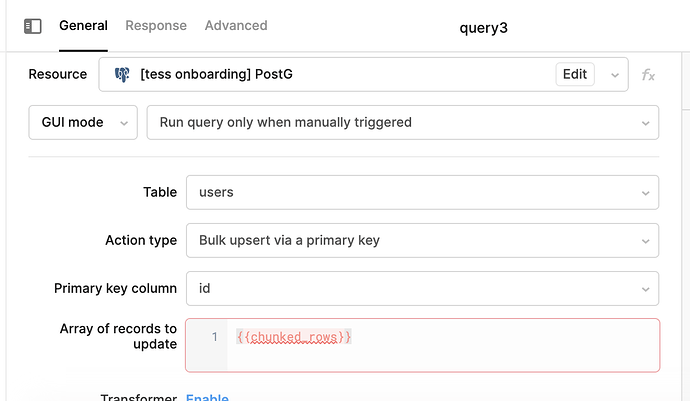

Here's the JS Code I used in a JS Code query type to trigger an update query with chunks of data at a time (instead of one, too big chunk):

const arr = table2.recordUpdates

const chunked_arr = _.chunk(arr, 2)

const query = query3; // your query to trigger for each

const promises = chunked_arr.map((each_arr) => {

return query.trigger({

additionalScope: {

chunked_rows: each_arr

}

})

});

return Promise.all(promises)

And just in case this is still helpful, here's a community post that also goes over the chunk function in a JS query to process large amounts of data in Retool.

![]() ,

,