Hi @Nathan_Franklin,

The chunking process did change! The TLDR is that we expanded the chunking window.

From our engineers: " We recently changed how things were chunked to make chunks way closer to the max size allowed by the AI providers APIs. This means chunks of english language text are usually around 30kb, not 1-2k characters.

If your document is not significantly larger than 30kb, you're only gonna end up with 1 chunk. I'm looking at uploads of 2.5mb files and it's chunked into ~80 pieces"

This should not have any effect on the effectiveness of the model to find the correct solution.

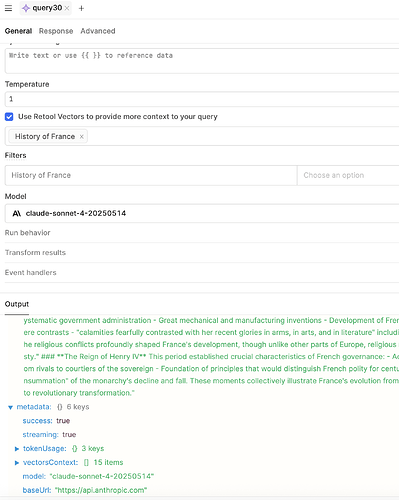

In terms of the original question on the number of documents inside of vectorsContext, I can keep looking into that. Did you change the temperature on the AI Query?

I just tested out a question with the same model as you are using with a single document in a Vector and it gave me 15 items inside of vectorsContext so I am very surprised you are getting so few items. Maybe the question is extremely specific and the agent is not able to find a lot of context? ![]()