My goal:

Create a smooth workflow that generates text with AI blocks, cleans data in Python, and stores results in our database.

The issue:

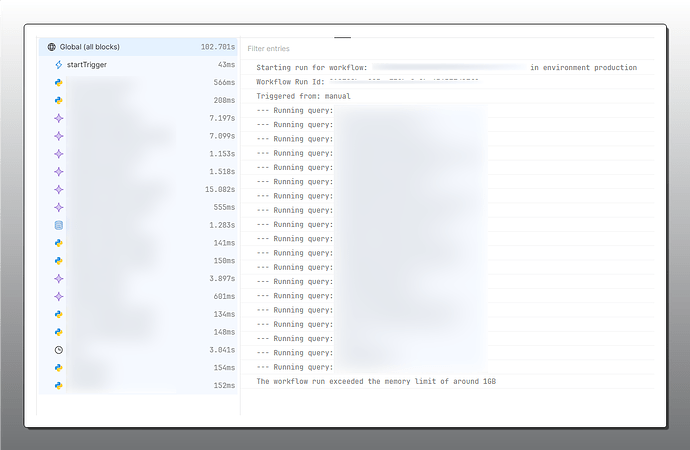

The workflow keeps crashing after a few steps with the error "The workflow run exceeded the memory limit of around 1GB" (see the screenshot above).

What I've tried:

- Reduced batch sizes in AI blocks

- Implemented chunked processing in Python (Pandas)

- Manually cleared intermediate variables
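
A minimal sketch of the chunked-processing pattern from the list above, for anyone who wants something concrete to comment on. It uses plain Python generators rather than Pandas so that it is self-contained, and it is not the exact code from the workflow. The names `iter_chunks`, `clean_row`, `process_in_chunks`, and `sink` are hypothetical, as are the `id` and `text` fields; `sink` stands in for whatever writes a batch to the database.

```python
from itertools import islice
from typing import Callable, Dict, Iterable, Iterator, List


def iter_chunks(rows: Iterable[Dict], size: int) -> Iterator[List[Dict]]:
    """Yield lists of at most `size` rows without materialising the whole input."""
    it = iter(rows)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def clean_row(row: Dict) -> Dict:
    # Hypothetical cleaning step: collapse repeated whitespace in the text field.
    return {"id": row["id"], "text": " ".join(row["text"].split())}


def process_in_chunks(
    rows: Iterable[Dict], size: int, sink: Callable[[List[Dict]], None]
) -> int:
    """Clean rows one chunk at a time and hand each cleaned chunk to `sink`.

    Only one chunk is referenced by this function at any moment, so peak
    memory is bounded by the chunk size as long as `sink` does not keep
    the batches around (for example, if it writes them to the database).
    """
    total = 0
    for chunk in iter_chunks(rows, size):
        cleaned = [clean_row(r) for r in chunk]
        sink(cleaned)  # placeholder for a database insert
        total += len(cleaned)
    return total
```

The point of the sketch is that memory stays bounded only if every stage is lazy: if the input is first loaded into one big list or DataFrame, or if each block returns its full result to the next block, chunking inside a single step does not lower the peak.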

Additional context:

- Using Retool Cloud (no self-hosted option)

- Working as a solo developer

Looking for practical ways to either:

- Reduce memory usage in the AI/Python steps, or

- Restructure the workflow to process data more efficiently

Any advice on specific AI models would also be welcome.

Would especially appreciate examples from others who've balanced AI generation with memory constraints!

Cheers,