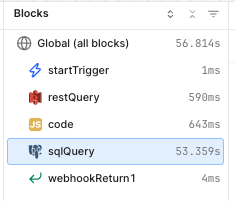

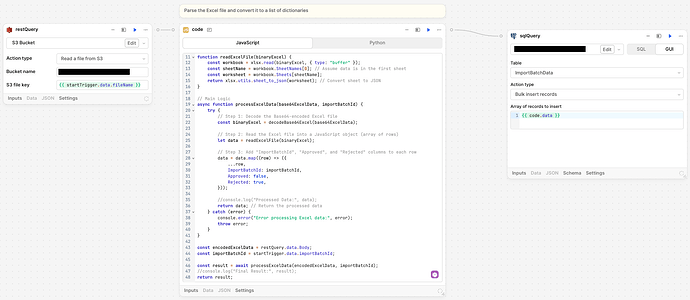

I have a Retool workflow where a JavaScript code block fetches an Excel file from an S3 bucket and reads it into an array. A Postgres Bulk Insert query then runs against that array using the {{ code.data }} value.

When the Excel file has 3k+ rows, the bulk insert takes over 50 seconds. I would like to speed this up.

Is there a more performant way to bulk insert this volume of data from an Excel file into my Postgres database?

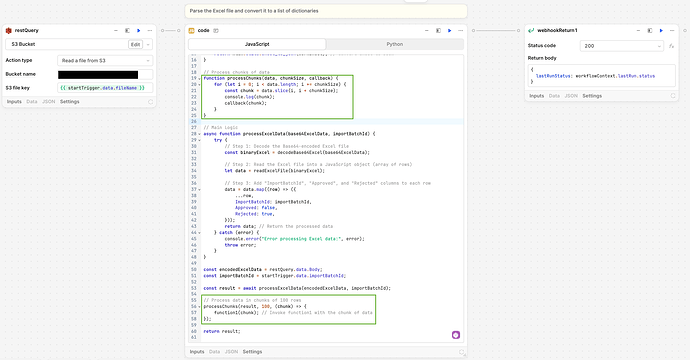

I found this post, which explains how to add a function to a workflow that wraps a resource query (the Postgres bulk insert, in my case) and then call that function in a loop over chunks of the data.

New function in the workflow:

Updated JavaScript code block that slices the data into chunks (I also removed the separate Postgres insert code block):

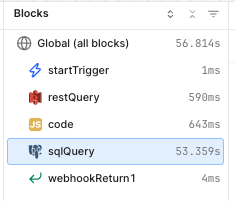
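For readers who cannot see the screenshot, here is a minimal sketch of the chunk-and-loop idea described above. It is not the exact code from the workflow: `insertChunk` is a hypothetical stand-in for the workflow function that runs the Postgres bulk insert, and the helper names are made up for illustration.

```javascript
// Split an array of rows into consecutive chunks of at most chunkSize rows.
function chunkRows(rows, chunkSize) {
  if (!Number.isInteger(chunkSize) || chunkSize <= 0) {
    throw new RangeError("chunkSize must be a positive integer");
  }
  const chunks = [];
  for (let start = 0; start < rows.length; start += chunkSize) {
    chunks.push(rows.slice(start, start + chunkSize));
  }
  return chunks;
}

// Insert all rows by awaiting insertChunk once per chunk, in order.
// insertChunk is an assumption: any async function that bulk inserts
// one array of rows (for example, a workflow function wrapping the query).
async function insertInChunks(rows, chunkSize, insertChunk) {
  let inserted = 0;
  for (const chunk of chunkRows(rows, chunkSize)) {
    await insertChunk(chunk);
    inserted += chunk.length;
  }
  return inserted; // total number of rows handed to insertChunk
}
```

In a workflow code block, this could be called with something like `await insertInChunks(rows, 500, (chunk) => bulkInsert(chunk))`, where `bulkInsert` is whatever you named your function and 500 is an assumed chunk size worth tuning for your table.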

My bulk insert is now completing in less than 10 secs!

Wow! Taking the runtime down from 50 seconds to 10 is awesome! Thank you for sharing!