Hi! I am fairly new to Retool, so apologies if I am making a silly mistake here.

I am attempting to bulk upsert data from a code block into a Retool DB table. There are ~6000 rows on average, so I chunk the data into batches of 500 rows. This works perfectly fine on the first run, when the table is completely empty, but when I re-run it to test updating, I get the following error:

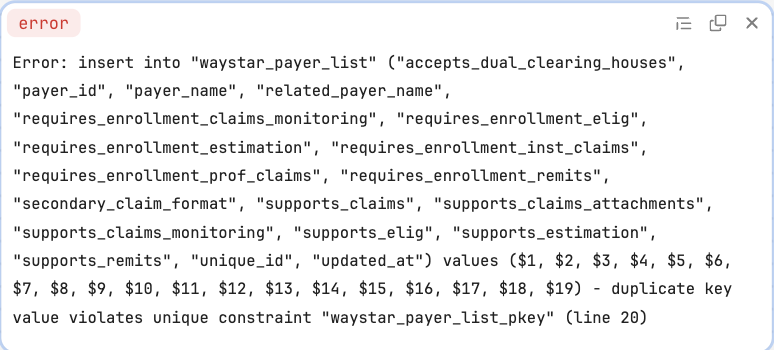

I see that the error shows the query:

    insert into "waystar_payer_list" ("accepts_dual_clearing_houses", "payer_id", "payer_name", "related_payer_name", "requires_enrollment_claims_monitoring", "requires_enrollment_elig", "requires_enrollment_estimation", "requires_enrollment_inst_claims", "requires_enrollment_prof_claims", "requires_enrollment_remits", "secondary_claim_format", "supports_claims", "supports_claims_attachments", "supports_claims_monitoring", "supports_elig", "supports_estimation", "supports_remits", "unique_id", "updated_at") values ($1, $2, $3, $4, $5, $6, $7, $8, $9, $10, $11, $12, $13, $14, $15, $16, $17, $18, $19)

but I expected something along the lines of this:

    INSERT INTO waystar_payer_list (
        unique_id, payer_id, payer_name, related_payer_name, requires_enrollment_prof_claims, requires_enrollment_inst_claims,
        secondary_claim_format, requires_enrollment_remits, requires_enrollment_elig, requires_enrollment_claims_monitoring,
        accepts_dual_clearing_houses, supports_claims_attachments, requires_enrollment_estimation, supports_claims,
        supports_remits, supports_elig, supports_claims_monitoring, supports_estimation, updated_at
    )
    VALUES ($1, $2, $3, $4, $5, $6, $7, $8, $9, $10, $11, $12, $13, $14, $15, $16, $17, $18, $19)
    ON CONFLICT (unique_id)
    DO UPDATE SET
        payer_id = EXCLUDED.payer_id,
        payer_name = EXCLUDED.payer_name,
        related_payer_name = EXCLUDED.related_payer_name,
        requires_enrollment_prof_claims = EXCLUDED.requires_enrollment_prof_claims,
        requires_enrollment_inst_claims = EXCLUDED.requires_enrollment_inst_claims,
        secondary_claim_format = EXCLUDED.secondary_claim_format,
        requires_enrollment_remits = EXCLUDED.requires_enrollment_remits,
        requires_enrollment_elig = EXCLUDED.requires_enrollment_elig,
        requires_enrollment_claims_monitoring = EXCLUDED.requires_enrollment_claims_monitoring,
        accepts_dual_clearing_houses = EXCLUDED.accepts_dual_clearing_houses,
        supports_claims_attachments = EXCLUDED.supports_claims_attachments,
        requires_enrollment_estimation = EXCLUDED.requires_enrollment_estimation,
        supports_claims = EXCLUDED.supports_claims,
        supports_remits = EXCLUDED.supports_remits,
        supports_elig = EXCLUDED.supports_elig,
        supports_claims_monitoring = EXCLUDED.supports_claims_monitoring,
        supports_estimation = EXCLUDED.supports_estimation,
        updated_at = EXCLUDED.updated_at;
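
To make the expected behavior concrete, here is a minimal sketch of the difference between a plain insert and an `ON CONFLICT ... DO UPDATE` upsert on a second run. It rests on several assumptions: Python's built-in `sqlite3` stands in for the Retool DB (which is PostgreSQL), `?` placeholders replace `$1, $2, ...`, only four of the nineteen columns are used, the sample rows are made up, and `build_upsert_sql` is a hypothetical helper, not anything Retool provides.

```python
import sqlite3
from typing import List


def build_upsert_sql(table: str, columns: List[str], conflict_column: str) -> str:
    """Build an INSERT ... ON CONFLICT ... DO UPDATE statement (hypothetical helper)."""
    placeholders = ", ".join("?" for _ in columns)
    # Every column except the conflict key is overwritten with the incoming value.
    updates = ", ".join(
        f"{col} = EXCLUDED.{col}" for col in columns if col != conflict_column
    )
    return (
        f"INSERT INTO {table} ({', '.join(columns)}) "
        f"VALUES ({placeholders}) "
        f"ON CONFLICT ({conflict_column}) DO UPDATE SET {updates}"
    )


# Reduced column set for illustration only.
columns = ["unique_id", "payer_id", "payer_name", "updated_at"]
upsert_sql = build_upsert_sql("waystar_payer_list", columns, "unique_id")
plain_sql = (
    "INSERT INTO waystar_payer_list (unique_id, payer_id, payer_name, updated_at) "
    "VALUES (?, ?, ?, ?)"
)

# In-memory SQLite database as a stand-in; unique_id carries the unique constraint.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE waystar_payer_list ("
    "unique_id TEXT PRIMARY KEY, payer_id TEXT, payer_name TEXT, updated_at TEXT)"
)

# Made-up sample rows: the second run repeats unique_id 'a1' with new values.
first_run = [
    ("a1", "P1", "Payer One", "2024-01-01"),
    ("b2", "P2", "Payer Two", "2024-01-01"),
]
second_run = [
    ("a1", "P1", "Payer One (renamed)", "2024-02-01"),
    ("c3", "P3", "Payer Three", "2024-02-01"),
]

# First run: the table is empty, so nothing conflicts.
conn.executemany(upsert_sql, first_run)

# A plain INSERT of an existing key fails, like the query shown in the error.
plain_insert_failed = False
try:
    conn.execute(plain_sql, second_run[0])
except sqlite3.IntegrityError:
    plain_insert_failed = True

# The upsert form updates the existing row and inserts the new one.
conn.executemany(upsert_sql, second_run)

result = conn.execute(
    "SELECT unique_id, payer_name, updated_at "
    "FROM waystar_payer_list ORDER BY unique_id"
).fetchall()
print(plain_insert_failed, result)
```

If the generated query in the error lacks the `ON CONFLICT` clause, as it appears to here, the second run would be expected to hit the unique constraint in the same way the plain insert does in this sketch.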

Am I using this upsert functionality incorrectly? Happy to provide more context if needed!
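
As context for the batching mentioned at the top, the chunking step amounts to something like the sketch below. This is an illustration in Python rather than the actual Retool code block; `chunk_rows` and the sample rows are hypothetical, and only the batch size of 500 and the ~6000 row count come from the description above.

```python
from typing import Any, Dict, Iterator, List

Row = Dict[str, Any]


def chunk_rows(rows: List[Row], size: int = 500) -> Iterator[List[Row]]:
    """Yield consecutive slices of at most `size` rows (hypothetical helper)."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


# Made-up stand-in data: 6000 rows keyed by unique_id.
rows = [{"unique_id": str(i), "payer_name": f"payer {i}"} for i in range(6000)]

batches = list(chunk_rows(rows))
print(len(batches), len(batches[0]))  # 12 batches of 500 rows each
```

Each batch would then be passed to the bulk upsert query in turn; the last batch is simply shorter when the row count is not a multiple of 500.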