We're curious if RT connects to the DB for each tab I have open or has one connection open on the backend for all our apps?

Retool will create new connections for each instance of an app that is open, so if you the app open many times (or if many users have the same app opened), then this scenario would lead to many connections being opened. We do pool connections in some cases, but depending on the resource type and how it gets routed in our backend, one user could have several connections open. All these users will be connected to your database using the same DB user that is setup in your Retool account. Are these multiple connections causing issues for you?

I'm currently running into issues with multiple connections. Aside from new connections for each instance of an app open... does Retool also create new connections for each resource query that is part of an app?

If so, what would be the best way to run multiple queries in succession without creating multiple connections?

Hi @bernardmma !

Due to our backend routing, it is possible that requests could be potentially sent from a different connection or connection pool. If you're trying to keep the connections to an absolute minimum, I'd recommend putting a connection pooler in front of your database so that you can manage and limit the connections. PgBouncer for Postgres is an example of this!

Mine retool app is creating a new connection every time when we run the query(PostgreSQL). As a result, I am facing too many connection for the role error. Retool also not releasing the idle connection as well.

I couldn't find any settings in the resource.

Can yo help me to sort out the issue?

I am running into the same issue of too many connections on simple submissions of only 50 records or so and I am the only one using the app.

This makes Retool a rather useless app if some extremely lowkey data handling requires some advanced changes on the DB itself. Wasn't the purpose of Retool to not have to do that kind of stuff???

It also maxes out connections when running a Promises trigger on an update record. e.g. say you can't perform a bulk update (because of a composite primary key) and need to do it row by row. If your table has more then 100 rows, or even if you run it multiple times on one table, it quickly saturates the 100 connections allowed by GCP. This is a problem.

Has there been any new development on this? I am also running into the problem of every tab in my retool app running out of available connections. I'm using a cloud based database so I do not have the option of installing a connection pooler, and I also have no idea what level of service I would need from the database host because I have no way of knowing how many connections retool is creating. Do I need 10 concurrent connections? 20? 500? All I can tell for sure is it's more than 5.

Hi all!

We are currently tracking this issue, but we don't have an update or timeline on when an improvement will be shipped.

This previous message is still true!

Retool will create new connections for each instance of an app that is open, so if you the app open many times (or if many users have the same app opened), then this scenario would lead to many connections being opened. We do pool connections in some cases, but depending on the resource type and how it gets routed in our backend, one user could have several connections open. All these users will be connected to your database using the same DB user that is set up in your Retool account.

Due to the scale of our deployment, during periods of high request volume to your resource (like having many users accessing the same application, or having an application that runs many queries simultaneously to the same resource), several connections will be made to your database. In these high-volume scenarios, the upper bound of connections will be < 300.

If you're not able to configure the number of connections made to your database using a connection pooler, you may also be able to utilize Query Caching in order to reduce the number of connections. Another option would be to use Self Hosted Retool, where the smaller infrastructure would make fewer connections to your database.

@ben we self-host retool and have a small pool of users (around 60), our RDS instance can take up to 900 simultaneous connections, in one month retool created (and never dropped!) almost 700 connections... restart the instance, drop connections, we're good again, but this is insane, why wouldnt it drop database connections?

I know I am answering on a post that is more than an year old, is this behaviour still true?

I could put a pooler in front of it but I shouldnt need to add one more thing to manage to solve this.

@fernando-b we've run into this issue as well over the last couple of months. We're self-hosted and have around 80-90 users with a postgres instance that can have up to 500 max connections. The active connections continue to grow each day and it never drops connections requiring us to restart the instance to clear the active connections before we hit the max connections allowed.

We have an open issue with Retool support and trying to work through it with them but curious if you've solved this problem.

Hi @stevenh , yes we did solve it.

environment variables all the way ![]()

# The maximum time, in milliseconds, that pool will try to get connection before throwing error. retool had it set to 100.000ms = 100s = 1.5~m

SEQUELIZE_ACQUIRE=3000

# The maximum time, in milliseconds, that a connection can be idle before being released. retool had it set to 100.000ms = 100s = 1.5~m

SEQUELIZE_IDLE=2000

# The time interval, in milliseconds, after which sequelize-pool will remove idle connections. retool had it set to 1.000.000ms = 1000s = 17~m

SEQUELIZE_EVICT=3000

# Maximum number of connections in pool. Default is 5, retool did not have it set

SEQUELIZE_MAX=100

# The number of times to use a connection before closing and replacing it. Default is Infinity, retool had it set to 1.000.000

SEQUELIZE_MAX_USES=1000

This has kept our connections at ~100.

Hi @Sebastien,

It looks like you may have a Retool Cloud account. Cloud users cannot set environment variables.

If you have a self-hosted instance, setting the env vars will depend on your deployment type, but it's often something you define in your docker.env file (as described here).

I have been evaluating Retool (cloud version). Today I began going through a dead-simple Retool/Redis tutorial. It has a Redis-stored JSON array object with 15 employee names, and 3 other attributes. It has add employee, update employee and delete employee buttons. In all, this simple page uses 23 connections loading the page, and when I hit "refresh", it maxes out my 30 max connections and will not finish the page refresh. Is there really no solution? I am wondering how this can be of use to anybody. Please let us know. Thank you.

Hi @David123,

Thanks for reaching out! Could you share a bit more info about your app so that we can look into it?

How many queries are running on page load? Does the app crash?

Does the app already have end users or are you still testing your use case?

Hi Tess! Thanks for responding. One query running on one page that has only one table on it. The connections is still a problem, but if I wait a few minutes, the connections free up. I have a much bigger problem. I’ll explain it, and hopefully you can advise me. I’ll keep it super simple:

clientkey1:

I issue the command, “Keys *”

Redis correctly returns the list of keys, each key name representing a client having a number of attributes (e.g. "Company", "City Street", etc.)

table1:

Two columns:

Company Key (e.g. “ACME:123”) FORMAT: { }JSON | VALUE :{{clientkey1.data[i]}}

Company Name (e.g., “ACME Inc.”).

The Company Key column is correctly populated. I cannot figure out how to get the “Company” value into the second column.

Would you please provide guidance?

Or anybody else?

Thanks to all!

Hi @David123 This second issue sounds like you might need to add a mapped value to the column. For both issues it may help to see some app and error screenshots

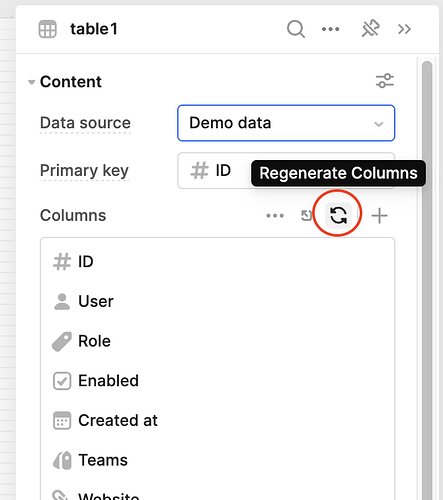

If the keys are changing dynamically, you'll either need to "Regenerate columns" from the table inspector:

or use dynamic column settings

3 posts were split to a new topic: Slow Oracle Database queries