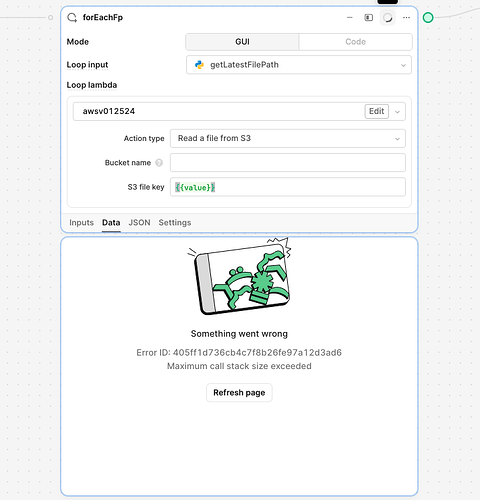

One of our workflows downloads huge S3 files and processes them in a loop, but at random points it stops working with an error.

It looks like an out-of-memory issue. How can we get the memory limit increased?

Our Retool project URL: https://athenaxcorp.retool.com/

Hi there! It sounds like the workflow is running out of memory. Each workflow has memory and rate limits, and a run that exceeds them can fail. Instead of raising the limit, you could split the work across separate workflows where possible, or paginate the results of your query and process only a portion of the data at a time.
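To make "a portion of the data at a time" concrete, here is a minimal, generic Python sketch. It is not Retool-specific and is not Retool's API. It assumes the file can be read as a stream, so only one chunk is held in memory at once. With boto3, for example, `s3.get_object(...)["Body"]` returns a `StreamingBody` that has a `read(amt)` method and could be passed as `stream`; whether boto3 is available in your workflow's Python environment is an assumption to check. `fetch_page(offset, limit)` is a hypothetical callback standing in for a paginated query.

```python
import io
from typing import BinaryIO, Callable, Iterator, List

CHUNK_SIZE = 1024 * 1024  # 1 MiB per read; an assumed value, tune it to your memory limit


def iter_chunks(stream: BinaryIO, chunk_size: int = CHUNK_SIZE) -> Iterator[bytes]:
    """Yield successive chunks from a file-like object until it is exhausted."""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        yield chunk


def process_in_chunks(stream: BinaryIO, handle: Callable[[bytes], None],
                      chunk_size: int = CHUNK_SIZE) -> int:
    """Pass each chunk to `handle` so only one chunk is in memory at a time.

    Returns the total number of bytes processed.
    """
    total = 0
    for chunk in iter_chunks(stream, chunk_size):
        handle(chunk)
        total += len(chunk)
    return total


def iter_pages(fetch_page: Callable[[int, int], List], page_size: int) -> Iterator[List]:
    """Request rows page by page via a hypothetical fetch_page(offset, limit).

    Stops as soon as a page comes back shorter than page_size (or empty).
    """
    offset = 0
    while True:
        page = fetch_page(offset, page_size)
        if page:
            yield page
        if len(page) < page_size:
            break
        offset += page_size


if __name__ == "__main__":
    # Stand-in for a large S3 object body: 2.5 MiB of zero bytes.
    fake_body = io.BytesIO(b"\0" * (5 * CHUNK_SIZE // 2))
    sizes = []
    total = process_in_chunks(fake_body, lambda c: sizes.append(len(c)))
    print(total, sizes)  # prints: 2621440 [1048576, 1048576, 524288]
```

For this to help, the `handle` callback should keep only small results, such as counts or aggregates, or write each chunk's output elsewhere. If it appends the raw chunks to a list, memory grows just as it would if the whole file were loaded at once.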