Hello folks!

Just wanted to share some findings and advice to help users decide which of the AI-powered chat tools to use.

I have seen several users working with the older, less feature-rich LLM Chat component and trying to add functionality that the newer Agent Chat feature is much better equipped to support.

So I will be going over a side-by-side comparison of the two, giving some examples of when each is best and how to get the most out of whichever option you choose!

LLM Chat

This is essentially a direct text-to-model tool that lets you communicate with a model via an AI Query.

You can pick your model from the configuration panel and ask the kind of fairly general questions that you would ask a model on its native website.

By adding Retool Vectors to the query, you can give the model more specific data to reference. Currently, Retool Vectors must be added manually, either through the GUI on the Vectors page or by uploading and updating them via a query, so this is best suited to specific information that is static.

In the Retool AI query, you can set the Input, Message history, and System Prompt to reference components and queries in the app, which keeps them dynamic. Otherwise, the AI does not have access to the contents of the app.
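To make the "only what you reference" point concrete, here is a rough conceptual sketch in Python. It is not Retool's implementation or API; the `app_state` keys and the `render` helper are made up for illustration. It just shows that the model only ever sees values you explicitly reference with {{ }}, and nothing else in the app.

```python
import re

# Hypothetical snapshot of app state; component names are illustrative only.
app_state = {
    "textInput1.value": "Which orders shipped late?",
    "select1.value": "EMEA",
}

def render(template: str, state: dict) -> str:
    """Replace each {{ key }} with its value from state.

    Keys that are not in state are left untouched, mirroring the idea
    that unreferenced or unavailable app content never reaches the model.
    """
    def substitute(match):
        key = match.group(1).strip()
        return str(state.get(key, match.group(0)))
    return re.sub(r"\{\{(.*?)\}\}", substitute, template)

# The three fields of the AI query, each built from explicit references.
system_prompt = render("You answer questions about the {{ select1.value }} region.", app_state)
user_input = render("{{ textInput1.value }}", app_state)
unresolved = render("{{ table1.data }}", app_state)  # not in app_state, stays as-is
```

Anything you want the model to know about has to be wired into one of those fields this way.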

Read more about it here on the docs!

Agent Chat

Agents are a massive upgrade in terms of capabilities, tools and accessible context.

Agents come with a variety of preset templates for common use cases. A huge factor for custom builds is the Configuration Assistant, which can take user text prompts and help generate custom tools or find and configure the out-of-the-box tools that Agents come with.

The most powerful tool is the workflows tool, which gives the agent the ability to run workflows containing any type of Resource Query Block that you could set up in a Workflow.

This allows the agent to draw in data from a database via query instead of needing to pass in {{table1.data}} to each and every query run in a chat component.

This also lets the agent grab exactly the data it needs, instead of you having to thread in context from every component in your app that the Agent's model might need.
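As a rough illustration of that difference, here is a small Python sketch using an in-memory SQLite table. Everything in it is hypothetical (the `orders` table, the `late_orders_tool` function); it is not how Agents or Workflows are implemented, just the general shape of "send everything every time" versus "fetch on demand".

```python
import sqlite3

# Stand-in for a database Resource; table and rows are made up.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, region TEXT, status TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "EMEA", "late"), (2, "EMEA", "on_time"), (3, "APAC", "late")],
)

def context_threading(conn):
    """LLM Chat style: the whole table is passed along with every query run."""
    return conn.execute("SELECT id, region, status FROM orders").fetchall()

def late_orders_tool(conn, region):
    """Agent style: a hypothetical tool that fetches only what the question needs."""
    return conn.execute(
        "SELECT id FROM orders WHERE region = ? AND status = 'late'",
        (region,),
    ).fetchall()

all_rows = context_threading(conn)           # every row, every time
needed_rows = late_orders_tool(conn, "EMEA")  # just the matching ids
```

With three rows the gap is trivial, but on a real table the first approach grows with the data while the second grows only with the answer.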

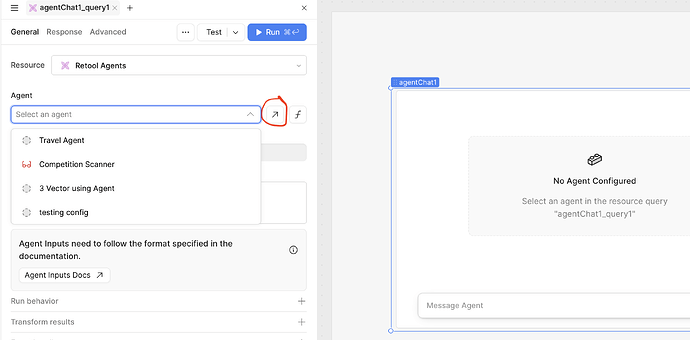

When setting up the Agent Chat component, you will be prompted to pick your agent.

If you don't have an agent configured yet, you can click the diagonal up-right arrow button to go to the agent setup page.

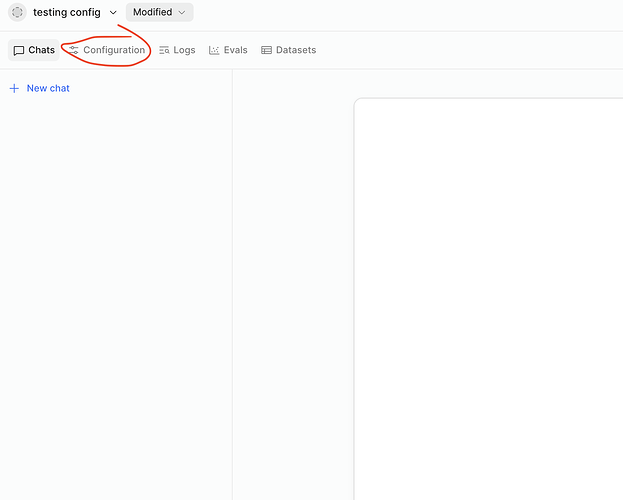

It will default to taking you to the Chats tab but this won't be super useful just yet.

You will be doing most of the setup in the Configuration tab.

This page is a mirror of the 'in app' chat component but it does have a 'History' tab similar to ChatGPT and other model-chat interfaces.

We are currently working on mirroring the chat history over to the 'in app' Agent Chat, so stay tuned for updates on that!

The Configuration tab is also where you will find the Configuration Assistant chat window, on the right side.

From there, you can wire up your agent with the model of your choice and with tools that grab context from the internet, from Resources queried in Workflows, and from Retool Vectors.

The Agent Queries will soon be able to take in frontend app component context via the much-loved {{ }} syntax, but that is still in the works. Some users have found workarounds to get some app context passed, and much better native support is coming soon!

That is one of the major differences between the two: LLM Chat queries can take in frontend component context much more easily, whereas Agent chats will only gain that soon but can already grab information from the web, Resources, and anything else reachable through their tools and workflows!

Another major difference is everyone's favorite: pricing.

We have extended details on our docs here for Agents billing.

For Assist billing, I believe docs are in the works. Will update this post with that soon.

The two biggest factors are which model you choose and how many actions the AI model takes. With LLM Chat, cost comes down to tokens in and tokens out; with Agents, you also have to account for the various tools and steps the Agent uses to execute a task.

We currently have a feature request open for better metrics on how much different users in an org are using Agents, and I believe for more detail on "pricing per call" as well. I will update this post with further details from the metrics team.

I have also recently learned that all plans on cloud have a $50 monthly allowance. Once that is maxed out, usage of Retool's enterprise keys can be rate limited (unless users bring their own keys).

That is because these orgs are using Retool's enterprise AI model keys (which are included by default when you select a model) and thus have a maximum $50 monthly allowance.

For example:

llama-4-scout is $2.50/hr, so the $50 allowance works out to 20 hours of free runtime.

Whereas a more expensive model such as claude-4-opus is $175.00/hr, which the same allowance covers for only about 17 minutes.
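If you want to run the same math for another model, here is a quick Python sketch. It uses only the $50 allowance and the two hourly rates quoted in this post; the `included_hours` helper is just an illustrative name, and it assumes the allowance is consumed purely by hourly runtime at a flat rate.

```python
# Monthly allowance on Retool's included keys, as quoted in this post.
MONTHLY_ALLOWANCE_USD = 50.00

# Hourly rates quoted in this post; check the billing docs for current numbers.
hourly_rates_usd = {
    "llama-4-scout": 2.50,
    "claude-4-opus": 175.00,
}

def included_hours(rate_per_hour, allowance=MONTHLY_ALLOWANCE_USD):
    """Hours of runtime the allowance covers at a flat hourly rate."""
    return allowance / rate_per_hour

for model, rate in hourly_rates_usd.items():
    hours = included_hours(rate)
    print(f"{model}: {hours:.2f} h (~{hours * 60:.0f} min)")

# llama-4-scout: 20.00 h (~1200 min)
# claude-4-opus: 0.29 h (~17 min)
```

So the model choice alone swings the included runtime from most of a day to a coffee break.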