Hey @ryo!

The 40MB upload limit is fixed for Cloud orgs, but you can get around it by sending your request directly from the browser and bypassing the Retool backend. This is slightly less secure, since your requests will come from a sandboxed iframe and therefore have a `null` CORS origin.

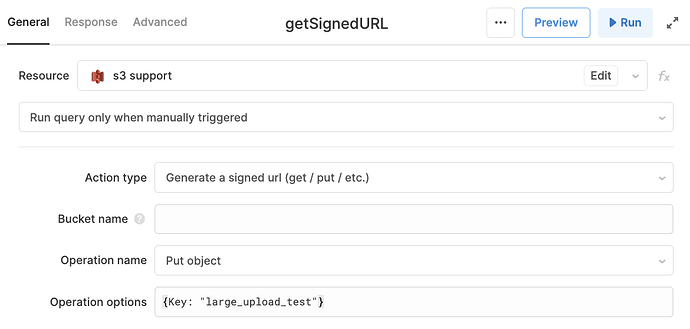
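If you want to switch to the browser route only when you have to, a small size check like this can tell you whether a file is over the limit. It's a sketch: `exceedsUploadLimit` is a hypothetical helper, and it assumes "40MB" means 40 × 1024 × 1024 bytes, which may not be exactly how Retool counts it, so leave some headroom.

```javascript
// Assumption: 1 MB = 1024 * 1024 bytes. Retool may measure the limit differently.
const RETOOL_UPLOAD_LIMIT_BYTES = 40 * 1024 * 1024;

/**
 * Hypothetical helper: returns true when a file of `sizeBytes` would exceed
 * the backend upload limit and should be sent directly from the browser.
 */
function exceedsUploadLimit(sizeBytes, limitBytes = RETOOL_UPLOAD_LIMIT_BYTES) {
  if (!Number.isFinite(sizeBytes) || sizeBytes < 0) {
    throw new RangeError(`invalid file size: ${sizeBytes}`);
  }
  return sizeBytes > limitBytes;
}
```

In the browser, a `File` object's `size` property is already in bytes, so it can be passed straight in.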

That being said, if you're uploading to S3, you can generate a signed URL for uploads using the S3 integration:

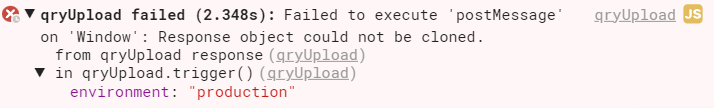
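Signed URLs stop working once they expire, and S3 answers an expired one with a 403, so it can help to check the URL before uploading. The sketch below reads the expiry out of the URL, assuming it's an AWS Signature Version 4 presigned URL carrying `X-Amz-Date` and `X-Amz-Expires` query parameters; for any other format it returns `null`. Both function names are hypothetical.

```javascript
/**
 * Hypothetical helper: returns the expiry time of a SigV4 presigned URL as a
 * Date, or null if the URL doesn't carry the SigV4 query parameters.
 */
function signedUrlExpiresAt(signedUrl) {
  const params = new URL(signedUrl).searchParams;
  const amzDate = params.get("X-Amz-Date"); // e.g. "20240131T120000Z" (UTC)
  const expires = params.get("X-Amz-Expires"); // lifetime in seconds
  if (!amzDate || !expires) return null;

  const match = /^(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z$/.exec(amzDate);
  if (!match) return null;
  // match[0] is the whole string; skip it and convert the captured fields.
  const [, year, month, day, hour, minute, second] = match.map(Number);
  const signedAtMs = Date.UTC(year, month - 1, day, hour, minute, second);
  return new Date(signedAtMs + Number(expires) * 1000);
}

/** True when the URL has expired, or will within `marginMs` milliseconds. */
function isSignedUrlExpired(signedUrl, now = new Date(), marginMs = 0) {
  const expiresAt = signedUrlExpiresAt(signedUrl);
  if (expiresAt === null) return false; // unknown format: let the upload decide
  return now.getTime() + marginMs >= expiresAt.getTime();
}
```

If `isSignedUrlExpired(getSignedURL.data.signedUrl)` returns true, re-run `getSignedURL` before starting the upload.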

From there, you can use any one of the file upload components and the following JS query to upload your file:

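```javascript
// fileButton1.value[0] holds the file contents as base64; decode it into raw bytes.
const fileBuffer = Uint8Array.from(atob(fileButton1.value[0]), (c) => c.charCodeAt(0));

// PUT the bytes to the signed URL, sending the file's own MIME type.
const request = new Request(getSignedURL.data.signedUrl, {
  method: "PUT",
  headers: {
    "Content-Type": fileButton1.files[0].type,
  },
  body: fileBuffer,
});

return fetch(request);
```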

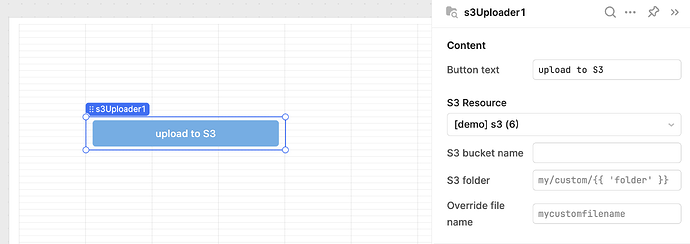
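One thing to watch with that query: `fetch` only rejects on network failures, so an S3 error (an expired or mismatched signature, for example) still resolves as a normal response. Below is a sketch of a wrapper that turns non-2xx responses into errors; `putToSignedUrl` is a hypothetical name, and the `fetchImpl` parameter exists only so it can be exercised without a network.

```javascript
/**
 * Hypothetical helper: PUTs `body` to a presigned URL and throws if the
 * storage service answers with a non-2xx status. Returns the HTTP status.
 */
async function putToSignedUrl(signedUrl, body, contentType, fetchImpl = fetch) {
  const response = await fetchImpl(signedUrl, {
    method: "PUT",
    headers: { "Content-Type": contentType },
    body,
  });
  if (!response.ok) {
    // S3 returns an XML error document; include it so failures are debuggable.
    const detail = await response.text();
    throw new Error(`Upload failed with HTTP ${response.status}: ${detail}`);
  }
  return response.status;
}
```

In the JS query you'd define it at the top and return `putToSignedUrl(getSignedURL.data.signedUrl, fileBuffer, fileButton1.files[0].type)` in place of `fetch(request)`, so a failed upload shows up as a failed query.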

Alternatively, if you'd also like to avoid going through the Retool frontend, you can use a custom component with a file input element to handle the upload. Here's a super bare-bones example of what that could look like:

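```html
<input type="file" id="file-input"/>
<button id="upload-button">Upload</button>
<div id="log"></div>

<script type="text/babel">
  const fileInput = document.getElementById("file-input");
  const uploadButton = document.getElementById("upload-button");
  const log = document.getElementById("log");
  const reader = new FileReader();

  // Rebind the uploader to the latest signed URL whenever the model changes.
  let uploader;
  window.Retool.subscribe((model) => (uploader = uploadData.bind(null, model.signedUrl)));

  // Read the selected file into an ArrayBuffer as soon as it's chosen.
  fileInput.addEventListener("change", () => reader.readAsArrayBuffer(fileInput.files[0]), false);

  uploadButton.addEventListener("click", () => {
    // Guard against clicking before a file is read or the model has arrived.
    if (!uploader || !reader.result) {
      log.textContent = "select a file first (and check that the signed URL has loaded)";
      return;
    }
    log.textContent = "uploading...";
    uploader(reader.result, fileInput.files[0].type).catch((err) => {
      log.textContent = `upload failed: ${err.message}`;
    });
  });

  async function uploadData(url, data, type) {
    const request = new Request(url, {
      method: "PUT",
      headers: {
        "Content-Type": type,
      },
      body: data,
    });

    const response = await fetch(request);
    // fetch only rejects on network errors, so check the HTTP status too.
    log.textContent = response.ok ? "done" : `upload failed (HTTP ${response.status})`;
  }
</script>
```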

With the following as the model for the custom component:

```
{
  signedUrl: {{getSignedURL.data.signedUrl}},
}
```
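For large files, reading the whole thing into an `ArrayBuffer` with `FileReader` isn't strictly necessary: `fetch` accepts a `Blob` as the request body, and a `File` is a `Blob`. The sketch below sends the selected file as-is. `uploadSelectedFile` is a hypothetical name, and it assumes the `Content-Type` you send matches whatever the URL was signed with, since S3 rejects the PUT if a signed header doesn't match.

```javascript
/**
 * Hypothetical helper: uploads a File/Blob straight to a presigned URL
 * without reading it into an ArrayBuffer first. Returns the HTTP status.
 */
async function uploadSelectedFile(signedUrl, file, fetchImpl = fetch) {
  if (!signedUrl) throw new Error("No signed URL yet; is the model populated?");
  if (!file) throw new Error("No file selected");
  const response = await fetchImpl(signedUrl, {
    method: "PUT",
    // Blob#type is "" when the browser can't infer a MIME type.
    headers: { "Content-Type": file.type || "application/octet-stream" },
    body: file, // fetch accepts a Blob/File body directly
  });
  if (!response.ok) throw new Error(`Upload failed with HTTP ${response.status}`);
  return response.status;
}
```

Inside the component, the click handler would call `uploadSelectedFile(model.signedUrl, fileInput.files[0])` with the URL captured from `window.Retool.subscribe`, and the `change` listener and `FileReader` could go away.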

Let me know if that works or if it raises any questions!