![]() looking for expertise and guidance!

looking for expertise and guidance!

We've built a portal for external partners to submit/update mySQL data rows via a form. We're now looking to give them the ability to use a CSV file to insert several rows in the table.

I've followed this tutorial that works well: CSV to Database, and I've been able to get some of the columns in the table to lookup from other columns (e.g. returning an id based on a name) thanks to this community post

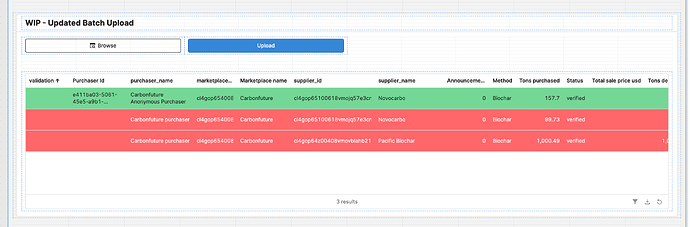

And I'm able to get some visual data validation (e.g. if the id isn't found, highlight in red):

The ideal workflow I'm envisioning would be:

- Once the file is selected/parsed, the user can update the data in the table, and when they change a name, it looks up the corresponding id again

- For each row, there would be a column with a data validation prompt based on certain criteria of data from the row (e.g. if the id isn't found, "id not found", if the date is not correctly formatted, "id not found, date incorrectly formatted", etc.)

- Once everything is green, users can click one button to upload all the rows in the table (instead of doing it row by row like in this tutorial)

Question: I'm stuck at the first step and wondering if the structure I'm putting in place (table scripts/transformers and one query to insert data rows) could lead somewhere or if another route would be more efficient (e.g. API calls like this doc, or something I haven't thought of)?

Any documentation, tutorials or guidance you can provide to get on the right path would be much appreciated ![]()