We just upgraded our on-prem instance to 3.253.1, and the whole code executor change came as a surprise. Maybe we didn't read the release notes carefully enough. But now our workflows no longer run.

I tried to get a code executor container running, but I couldn't find any real documentation on how to add one to an existing system after an upgrade. So I copied the relevant changes from the default docker-compose file [1] into our setup and restarted everything.

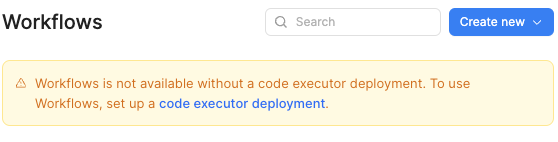

I still get this warning:

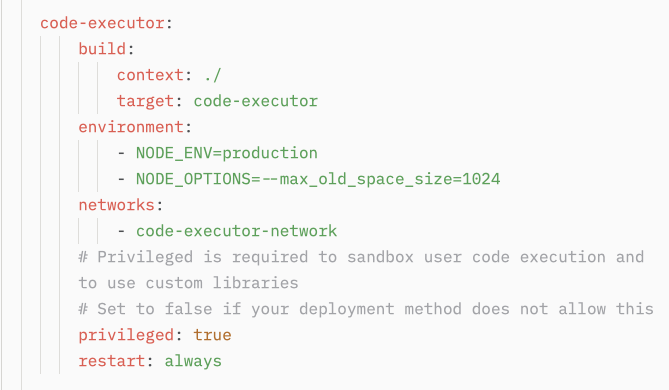

That's the entry in docker-compose.yml.

What's missing? Where is the documentation for getting the code executor running?

[1] `compose.yaml` on the master branch of tryretool/retool-onpremise (GitHub)

For reference, here is our current docker-compose file:

```yaml
# Use as is if using self-managed Temporal cluster deployed alongside Retool
# Compare other deployment options here: https://docs.retool/self-hosted/concepts/temporal#compare-options
include:
  - temporal.yaml

services:
  api:
    build:
      context: ./
      dockerfile: Dockerfile
    env_file: ./docker.env
    environment:
      - SERVICE_TYPE=MAIN_BACKEND
      - DB_CONNECTOR_HOST=http://db-connector
      - DB_CONNECTOR_PORT=3002
      - DB_SSH_CONNECTOR_HOST=http://db-ssh-connector
      - DB_SSH_CONNECTOR_PORT=3002
      - WORKFLOW_TEMPORAL_CLUSTER_FRONTEND_HOST=temporal
      - WORKFLOW_TEMPORAL_CLUSTER_FRONTEND_PORT=7233
      - WORKFLOW_BACKEND_HOST=http://api:3000
    networks:
      - frontend-network
      - backend-network
      - db-connector-network
      - db-ssh-connector-network
      - workflows-network
      - code-executor-network
    depends_on:
      - postgres
      - retooldb-postgres
      - db-connector
      - db-ssh-connector
      - jobs-runner
      - workflows-worker
    command: bash -c "./docker_scripts/wait-for-it.sh postgres:5432; ./docker_scripts/start_api.sh"
    links:
      - postgres
    ports:
      - "3000:3000"
    restart: on-failure
    volumes:
      - ./keys:/root/.ssh
      - ssh:/retool_backend/autogen_ssh_keys
      - ./retool:/usr/local/retool-git-repo
      - ${BOOTSTRAP_SOURCE:-./retool}:/usr/local/retool-repo
      - ./protos:/retool_backend/protos

  jobs-runner:
    build:
      context: ./
      dockerfile: Dockerfile
    env_file: ./docker.env
    environment:
      - SERVICE_TYPE=JOBS_RUNNER
    networks:
      - backend-network
    depends_on:
      - postgres
    command: bash -c "chmod -R +x ./docker_scripts; sync; ./docker_scripts/wait-for-it.sh postgres:5432; ./docker_scripts/start_api.sh"
    links:
      - postgres
    volumes:
      - ./keys:/root/.ssh

  db-connector:
    build:
      context: ./
      dockerfile: Dockerfile
    env_file: ./docker.env
    environment:
      - SERVICE_TYPE=DB_CONNECTOR_SERVICE
      - DBCONNECTOR_POSTGRES_POOL_MAX_SIZE=100
      - DBCONNECTOR_QUERY_TIMEOUT_MS=120000
    networks:
      - db-connector-network
    restart: on-failure
    volumes:
      - ./protos:/retool_backend/protos

  db-ssh-connector:
    build:
      context: ./
      dockerfile: Dockerfile
    command: bash -c "./docker_scripts/generate_key_pair.sh; ./docker_scripts/start_api.sh"
    env_file: ./docker.env
    environment:
      - SERVICE_TYPE=DB_SSH_CONNECTOR_SERVICE
      - DBCONNECTOR_POSTGRES_POOL_MAX_SIZE=100
      - DBCONNECTOR_QUERY_TIMEOUT_MS=120000
    networks:
      - db-ssh-connector-network
    volumes:
      - ssh:/retool_backend/autogen_ssh_keys
      - ./keys:/retool_backend/keys
      - ./protos:/retool_backend/protos
    restart: on-failure

  workflows-worker:
    build:
      context: ./
      dockerfile: Dockerfile
    command: bash -c "./docker_scripts/wait-for-it.sh postgres:5432; ./docker_scripts/start_api.sh"
    env_file: ./docker.env
    depends_on:
      - temporal
    environment:
      - SERVICE_TYPE=WORKFLOW_TEMPORAL_WORKER
      - NODE_OPTIONS=--max_old_space_size=1024
      - DISABLE_DATABASE_MIGRATIONS=true
      - WORKFLOW_TEMPORAL_CLUSTER_FRONTEND_HOST=temporal
      - WORKFLOW_TEMPORAL_CLUSTER_FRONTEND_PORT=7233
      - WORKFLOW_BACKEND_HOST=http://api:3000
    networks:
      - backend-network
      - db-connector-network
      - workflows-network
      - code-executor-network
    restart: on-failure

  code-executor:
    build:
      context: ./
      target: code-executor
    environment:
      - NODE_ENV=production
      - NODE_OPTIONS=--max_old_space_size=1024
    networks:
      - code-executor-network
    # Privileged is required to sandbox user code execution and to use custom libraries
    # Set to false if your deployment method does not allow this
    privileged: true
    restart: always

  # Retool's storage database. See these docs to migrate to an externally hosted database: https://docs.retool.com/docs/configuring-retools-storage-database
  postgres:
    image: "postgres:11.13"
    env_file: docker.env
    networks:
      - backend-network
      - db-connector-network
      - temporal-network
    volumes:
      - data:/var/lib/postgresql/data
    ports:
      - "5443:5432"

  retooldb-postgres:
    image: "postgres:14.3"
    env_file: retooldb.env
    networks:
      - backend-network
      - db-connector-network
    volumes:
      - retooldb-data:/var/lib/postgresql/data

  # Not required, but leave this container to use nginx for handling the frontend & SSL certification
  https-portal:
    image: tryretool/https-portal:latest
    ports:
      - "80:80"
      - "443:443"
    links:
      - api
    restart: always
    env_file: ./docker.env
    environment:
      STAGE: "production" # <- Change 'local' to 'production' to use a LetsEncrypt signed SSL cert
      CLIENT_MAX_BODY_SIZE: 40M
      KEEPALIVE_TIMEOUT: 605
      PROXY_CONNECT_TIMEOUT: 600
      PROXY_SEND_TIMEOUT: 600
      PROXY_READ_TIMEOUT: 600
    networks:
      - frontend-network

networks:
  frontend-network:
  backend-network:
  workflows-network:
  db-connector-network:
  db-ssh-connector-network:
  #temporal-network:
  code-executor-network:

volumes:
  ssh:
  data:
  retooldb-data:
```
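One difference worth checking against the default compose file [1]: neither `api` nor `workflows-worker` in the file above tells the backend where the executor lives. The default file may set a `CODE_EXECUTOR_INGRESS_DOMAIN` variable for this. The fragment below is a hedged sketch of that addition; the variable name and port `3004` are assumptions to verify against [1] for your version, not something confirmed in this thread:

```yaml
services:
  api:
    environment:
      # ASSUMPTION: variable name and port 3004 are taken to match the default
      # compose file [1]; verify against the version you are running.
      - CODE_EXECUTOR_INGRESS_DOMAIN=http://code-executor:3004
  workflows-worker:
    environment:
      # ASSUMPTION: same variable and port as above; both services already
      # join code-executor-network, so the hostname "code-executor" resolves.
      - CODE_EXECUTOR_INGRESS_DOMAIN=http://code-executor:3004
```

The entries can be added next to the existing `environment` lists, or kept in a `docker-compose.override.yml`, since Compose merges `environment` entries across files. Running `docker compose up -d` afterwards should recreate the two changed services with the new setting.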