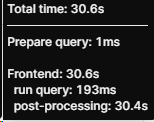

I am using the legacy table to render 1200 rows and 12 columns from a query JSON source. When I refresh or reload the page, I get the stats shown above, which are quite unexpected.

I use client-side pagination (the only kind that works with BigQuery), and there is only one component to update, that table. So I was expecting something more like 1 or 2 seconds at most.

Any clue on what may be wrong?

The legacy table component cannot handle more than a few hundred rows, it seems. BigQuery does support LIMIT/OFFSET pagination, but this means incurring charges on every query because the SQL changes each time. It also doesn't leverage the cache (on either the Retool or the BQ side).

So I found a nice workaround using slice(). Here is how I implemented it in my "final" JSON query:

select * from {{invoices.data.slice(table1.paginationOffset, table1.paginationOffset + table1.pageSize)}} as a left join {{actions.data}} as b on a.key=b.key

Post-processing shows 33ms now... 1000x faster.
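
For anyone who wants to check the slicing logic outside Retool, here is a minimal sketch in plain JavaScript. The names `paginate`, `rows`, `offset` and `pageSize` are made up for illustration; in the app, the last two would correspond to table1.paginationOffset and table1.pageSize, and the fake rows stand in for invoices.data.

```javascript
// Hypothetical helper: returns one page of rows without modifying the source array.
function paginate(rows, offset, pageSize) {
  return rows.slice(offset, offset + pageSize);
}

// 1200 made-up rows standing in for invoices.data.
const rows = Array.from({ length: 1200 }, (_, i) => ({ key: i }));

const firstPage = paginate(rows, 0, 50);
const lastPage = paginate(rows, 1175, 50);

console.log(firstPage.length); // 50
console.log(lastPage.length);  // 25, slice simply stops at the end of the array
```

Only the rows of the current page are handed to the table, which is presumably why the rendering cost drops so sharply.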

To be complete, I have extended the concept to sorting.

Because this is not a one-liner, I have reverted to the original query and used the following transformer to handle both pagination and sorting:

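let out = data;

// Sort ascending by the column currently selected in the table.
if ({{table1.sort}}) { out = _.sortBy(data, {{table1.sortedColumn}}); }

// Reverse a copy for descending order, so that data itself is never mutated.
if ({{table1.sortedDesc}}) { out = [...out].reverse(); }

// Return only the rows of the current page.
return out.slice({{table1.paginationOffset}}, {{table1.paginationOffset + table1.pageSize}});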
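
The same sort-then-slice logic can be tried outside Retool with a small standalone sketch. It is not the transformer itself: `sortAndPaginate` and its option names are hypothetical, the sample rows are invented, and a plain comparison sort stands in for _.sortBy so that the snippet runs without Lodash.

```javascript
// Hypothetical stand-in for the transformer: sort, optionally reverse, then slice.
function sortAndPaginate(rows, { sortedColumn, sortedDesc, offset, pageSize }) {
  let out = rows;
  if (sortedColumn) {
    // Copy before sorting so the caller's array is left untouched.
    out = [...rows].sort((a, b) => {
      if (a[sortedColumn] < b[sortedColumn]) return -1;
      if (a[sortedColumn] > b[sortedColumn]) return 1;
      return 0;
    });
  }
  if (sortedDesc) {
    // Reverse a copy for the same reason.
    out = [...out].reverse();
  }
  return out.slice(offset, offset + pageSize);
}

// Made-up rows for illustration only.
const sample = [
  { key: "b", amount: 20 },
  { key: "a", amount: 30 },
  { key: "c", amount: 10 },
];

const page = sortAndPaginate(sample, {
  sortedColumn: "amount",
  sortedDesc: true,
  offset: 0,
  pageSize: 2,
});

console.log(page.map((r) => r.key)); // [ 'a', 'b' ]
```

Sorting has to happen before slicing; otherwise only the rows of the current page would be reordered instead of the whole result set.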