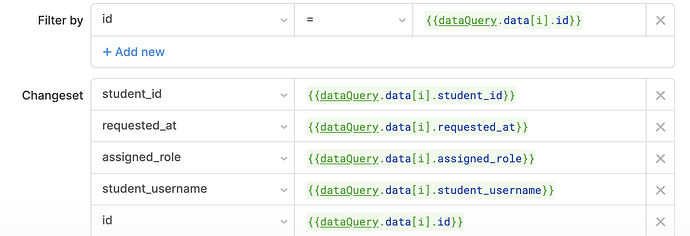

Thanks, Byron. This is what I ended up doing and it worked. Thanks @justin for the idea:

-

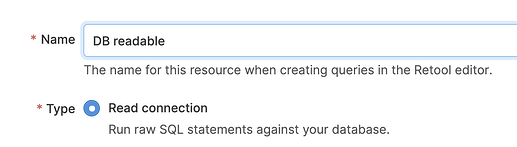

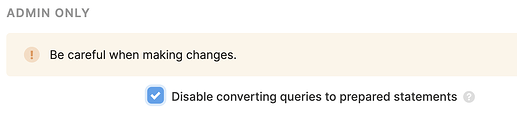

Create a read connection for the DB I want to write to and make sure you select "Disable converting queries to prepared statements" (understand the security implications of doing this)

-

Create a transformer or a JS query to reshape the data into SQL values. This is the function I added to my Organization Settings / Advanced / Preloaded Javascript and in my transformer I just did return toSqlValues(data, columnNames);:

// given

// columnNames = [ "id", "post_id", "student_username", "requested_at", "actual_role"];

// arrayOfObjects = [

// {id: "1499_129",post_id: 12594,student_username: "o'henry",requested_at: "2020-01-14T04:39:42.983Z",actual_role: null},

// {id: "1499_129", post_id: 12594, student_username: "o'henry", requested_at: "2020-01-14T04:39:42.983Z", actual_role: null},

// ];

//

// returns

// "('1499_129',12594,'o''henry','2020-01-14T04:39:42.983Z',null), ('1499_129',12594,'o''henry','2020-01-14T04:39:42.983Z',null)"

window.toSqlValues = function (arrayOfObjects, columnNames) {

return arrayOfObjects

.map((rowObj) => {

const rowArray = columnNames.map((columnName) => rowObj[columnName]);

return JSON.stringify(rowArray)

.replace(/\[([^\]]+)\]/, "($1)")

.replace(/'/g, "''")

.replace(/"/g, "'");

})

.join(", ");

};

- Create a query for your readable DB and write the UPSERT statement. You could do the whole thing in JS as well, but I wanted to keep it separate and just interpolate the values:

INSERT INTO

class_attendance (

id,

topic_id,

student_id,

student_username

)

VALUES {{ myTransformer.value }} ON CONFLICT (id) DO UPDATE

SET

topic_id = excluded.topic_id,

student_id = excluded.student_id,

student_username = excluded.student_username;

- Assign this query to a button and run it. I was able to UPSERT 2000 rows in 3 seconds instead of timing out with the default bulk upsert query.

If anyone (@alex-w, @justin) can recommend a better way of doing this, I'm all ears.