I was wondering if there were any plans (or if a FR could be made) to connect Retool Agents with User Tasks so that any intermediate steps made by an Agent could be interrupted for human oversight.

I've started seeing 'Human-in-the-Loop' (HITL) patterns across all parts of the Agentic/AI software development cycle (cycle? idk if something continuous and never-ending has a cycle, but whatever... the process), like with:

- Encord for model training
- LangGraph/LangChain for LLM applications/model consumption (small side note: you can use langchain-postgres to connect to your Retool DB, but you might need an older version that better supports PGVector rather than PGVectorStore, unless Retool updates the extension I guess)
- Credo AI for AI governance

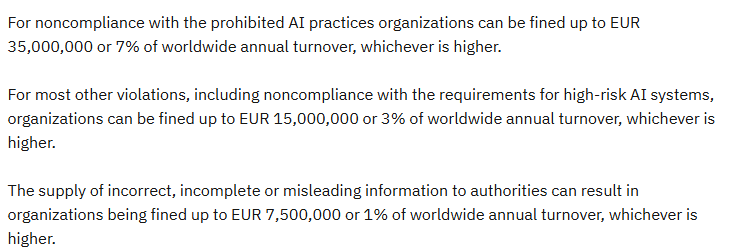

IBM even got in on it and wrote a nice article about HITL that makes a rather valid point: regulations.

> Some AI regulations mandate certain levels of HITL. For example, the EU AI Act’s Article 14 says that “High-risk AI systems shall be designed and developed in such a way, including with appropriate human-machine interface tools, that they can be effectively overseen by natural persons during the period in which they are in use.”

I feel sooo bad for anybody in the EU who isn't aware of these regulations (and for any engineer who has to implement compliance and PRAY they did it right for their entire foreseeable employed future).

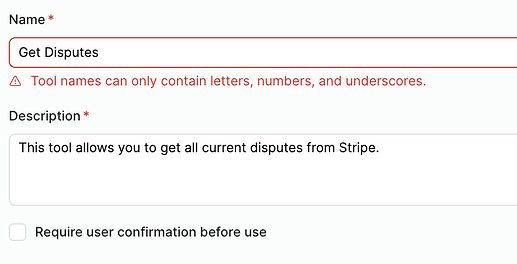

We can currently do this:

- if we implement our own MCP Server (probably the cheaper of the 2 solutions, but also the more complex one)

- or with Workflows, but only before or after an Agent runs... unless we introduce growing (in the worst case, exponentially growing) complexity and cost into the process by splitting each Agent into at least 2 separate Agents (one for generating a plan, another for executing it), which lets us put a User Task between planning and execution for HITL. While it's not exactly the same as interrupting the pipeline, the end results can be the same (I'm no lawyer, but I'd 100% be tempted to call that good enough for compliance... as long as I'm not in the EU), but you're forced to at least double the number of LLM calls.
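Roughly, the plan/approve/execute split looks like the following. This is a minimal Python sketch under stated assumptions. The functions `planner_agent`, `executor_agent`, `run_with_review` and the `LLMCallCounter` class are hypothetical stand-ins, not Retool APIs. The "LLM calls" are simulated counters. The `review` callback plays the role of the User Task sitting between the two Agents in a Workflow.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class LLMCallCounter:
    """Tracks how many (simulated) LLM calls a run makes."""
    calls: int = 0


def planner_agent(goal: str, llm: LLMCallCounter) -> list[str]:
    # Hypothetical Agent #1: one simulated LLM call that only proposes steps.
    llm.calls += 1
    return [f"look up {goal}", f"draft reply for {goal}"]


def executor_agent(plan: list[str], llm: LLMCallCounter) -> list[str]:
    # Hypothetical Agent #2: a second simulated LLM call that runs the approved plan.
    llm.calls += 1
    return [f"done: {step}" for step in plan]


def run_with_review(
    goal: str,
    review: Callable[[list[str]], Optional[list[str]]],
) -> tuple[Optional[list[str]], int]:
    """Plan, pause for a human decision, then execute only what was approved."""
    llm = LLMCallCounter()
    plan = planner_agent(goal, llm)
    # Human checkpoint: stands in for a User Task between the two Agents.
    # The reviewer can approve, edit (return a different list) or reject (None).
    approved = review(plan)
    if approved is None:
        return None, llm.calls  # rejected: the executor never runs
    return executor_agent(approved, llm), llm.calls


if __name__ == "__main__":
    # The reviewer trims the plan down to its first step before approving.
    result, calls = run_with_review("ticket 42", lambda plan: plan[:1])
    print(result, calls)  # ['done: look up ticket 42'] 2
```

The counter is there to make the cost visible. Even this smallest version makes two LLM calls where a single Agent would make one. The human also only sees the plan, not the intermediate steps the executor takes. Interrupting inside the Agent would avoid both of those problems.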