Hi All,

My goal is to analyze data from a table component through an AI chat component, with questions such as: What is the highest value? What is the average value? Are there any exceptions or deviations? What conclusions can be drawn?

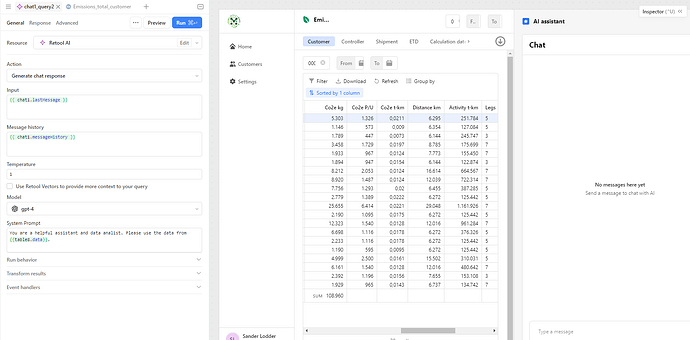

I have a table component that loads data from a SQL query.

I have a chat component that refers to the table data.

I use my own OpenAI API key.

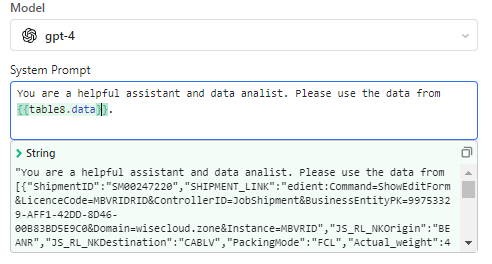

This is how I set up my chat component (it refers to `{{table8.data}}`).
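To illustrate why this gets expensive, here is a rough sketch of what referencing the whole table amounts to: every row is serialized into the prompt text on every question. The rows below are hypothetical stand-ins for `{{table8.data}}`, `build_prompt` and `rough_token_estimate` are hypothetical helpers, and the "about 4 characters per token" figure is only a rule of thumb, not the exact OpenAI tokenizer.

```python
import json

# Hypothetical rows standing in for {{table8.data}}; real columns come from the SQL query.
rows = [{"id": i, "region": "north", "value": 100 + i} for i in range(1000)]


def build_prompt(question: str, table_rows: list) -> str:
    # The entire table is serialized into the prompt for every single question.
    return f"Table data:\n{json.dumps(table_rows)}\n\nQuestion: {question}"


def rough_token_estimate(text: str) -> int:
    # Rule of thumb: roughly 4 characters per token for English/JSON text; not exact.
    return len(text) // 4


prompt = build_prompt("What is the highest value?", rows)
print(rough_token_estimate(prompt))
```

Even with only 1,000 small rows, the estimate lands above 10,000 tokens, and the cost grows linearly with the number of rows and columns, regardless of how short the question is.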

This works okay, but it produces a very large token count in the OpenAI API prompt. A simple prompt like "What is the highest value?" results in tens of thousands of tokens, because the entire table is pulled into every prompt.

This leads to high API costs.
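For illustration, one possible workaround (an assumption, not something tested in this app) is to pre-aggregate the data and send only a compact summary to the model. The same numbers could also come straight from the SQL query with aggregates such as `MAX` and `AVG`. The `summarize` helper, the `value` column name, and the 2-standard-deviation outlier rule are all hypothetical choices for this sketch.

```python
import json
import statistics

# Hypothetical data: 1,000 ordinary rows plus one obvious outlier.
rows = [{"id": i, "value": 100 + (i % 10)} for i in range(1000)]
rows.append({"id": 1000, "value": 900})


def summarize(table_rows: list, column: str) -> dict:
    values = [r[column] for r in table_rows]
    mean = statistics.mean(values)
    stdev = statistics.pstdev(values)
    # Flag values more than 2 standard deviations from the mean as possible outliers.
    outliers = [v for v in values if stdev and abs(v - mean) > 2 * stdev]
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": round(mean, 2),
        "stdev": round(stdev, 2),
        "outliers": outliers[:20],  # cap the list so the summary stays small
    }


summary = summarize(rows, "value")
compact_prompt = (
    f"Summary of column 'value': {json.dumps(summary)}\n\n"
    "Question: What is the highest value?"
)
print(compact_prompt)
```

The trade-off is that the model can only answer questions the summary covers; open-ended questions about individual rows would still need (a filtered subset of) the raw data.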

What is the best way to manage this, or is there a workaround to reduce token usage? Or is this approach simply not advisable?

Thanks,

Sander