Hello everyone,

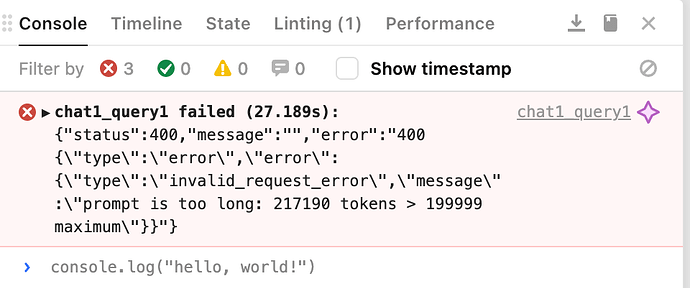

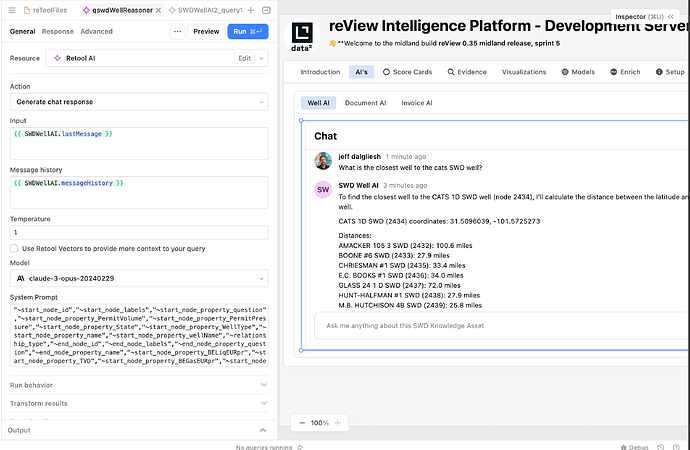

I am building an app that uses a neo4j knowledge graph to ground the LLM in reasoning about our client's dataset. Basically, we pass the knowledge graph in as part of the system prompt (as text). We are using the Anthropic Claude Opus model, which can handle a 200,000-token context window. Retool errors out when we make the LLM call, saying we are sending too many tokens. (We are not using vectors.)

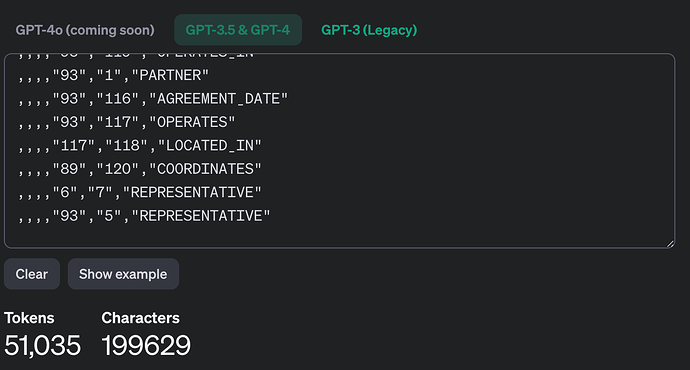

When I paste the same system message into the OpenAI token counter, it says there are only 51,022 tokens (199,549 characters), well below the limit of 200K.

Anyone else seeing this? Seems like a bug.

Hello @jeff_dalgliesh!

That is odd, as you seem to be well below the limit. I can check with our AI team and see if we can help troubleshoot.

How are you sending the 50k tokens? If you can share a screenshot of the chat1_query1 I might be able to get a better idea of why the "prompt is too long" error is coming back.

I am guessing you don't get the same error if you put the 50k tokens directly into your neo4j knowledge graph/Anthropic Claude Opus model?

One guess is that Retool might be set up to use vectors (uploading tokens to vectors and then passing those to the model), but I can check our docs and ask the team to find out more!

I'm sure you've thought of this, but I figured I should double-check just in case: did you make sure neo4j parses tokens the same way as OpenAI? I'm not 100% sure how it works, but a token is more akin to a syllable than to what coders generally think of as a token. I have no idea how neo4j does this, though, so I'll have to defer to you on that.


For now I was copying and pasting all of the text into the System Prompt text box on the chat (I will update this later to use the results of a subgraph query). I pasted the same subgraph text into the OpenAI token counter and it only counted 51K. I will check it out again later this week, but we worked around it by implementing our own chatbot.


Glad you were able to find a workaround!

I was looking into the error message, and it seems that it was not coming from Retool but likely from Claude/neo4j.

To Bob's point, there must be a discrepancy between OpenAI's token counter and the one used by Claude/neo4j, which somehow put you at 217190 tokens (from the error message pic).
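To put the two counts side by side, here is a rough sketch in Python that uses only the numbers quoted in this thread. The `summarize` helper and its field names are made up for illustration; nothing here calls Retool, OpenAI, or Anthropic.

```python
# Numbers reported in this thread.
CHARS = 199_549          # characters in the pasted system prompt
OPENAI_TOKENS = 51_022   # count from the OpenAI token counter
ERROR_TOKENS = 217_190   # count quoted in the "prompt is too long" error
CONTEXT_LIMIT = 200_000  # context window mentioned in the original post


def summarize(chars, openai_tokens, error_tokens, limit):
    """Hypothetical helper: compare the two token counts for the same text."""
    return {
        # Average characters per token under each count.
        "chars_per_token_openai": round(chars / openai_tokens, 2),
        "chars_per_token_error": round(chars / error_tokens, 2),
        # How many times larger the error's count is than the OpenAI count.
        "ratio_error_to_openai": round(error_tokens / openai_tokens, 2),
        # Tokens over the limit according to the error message.
        "over_limit_by": error_tokens - limit,
    }


summary = summarize(CHARS, OPENAI_TOKENS, ERROR_TOKENS, CONTEXT_LIMIT)
for name, value in summary.items():
    print(f"{name}: {value}")
```

On these numbers the error's count is roughly 4.26 times the OpenAI count, or a bit under one character per token. The error count presumably covers the whole request rather than just the pasted text, so it may be worth checking what else is sent along with the system prompt before blaming the tokenizer alone.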

Let me know if you come up with any other insights that would be helpful to share!
